- Review

- Open access


Indoor positioning and wayfinding systems: a survey

Human-centric Computing and Information Sciences volume 10, Article number: 18 (2020)

Abstract

Navigation systems help users access unfamiliar environments. Current technological advancements enable these systems to be embedded in handheld devices, which increases both the popularity of navigation systems and the number of users. In indoor environments, the lack of Global Positioning System (GPS) signals and of line of sight with orbiting satellites makes navigation more challenging than in outdoor environments. Radio frequency (RF) signals, computer vision, and sensor-based solutions are more suitable for tracking users in indoor environments. This article provides a comprehensive summary of the evolution of indoor navigation and indoor positioning technologies. In particular, the paper reviews different computer vision-based indoor navigation and positioning systems along with indoor scene recognition methods that can aid indoor navigation. Navigation and positioning systems that utilize pedestrian dead reckoning (PDR) methods and various communication technologies, such as Wi-Fi, Radio Frequency Identification (RFID), visible light, Bluetooth and ultra-wideband (UWB), are detailed as well. Moreover, this article investigates and contrasts the different navigation systems in each category. Various evaluation criteria for indoor navigation systems are proposed in this work. The article concludes with a brief insight into future directions in indoor positioning and navigation systems.

Introduction

The term ‘navigation’ collectively represents tasks that include tracking the user’s position, planning feasible routes and guiding the user along those routes to the desired destination. A considerable number of navigation systems have been developed for outdoor and indoor environments. Most outdoor navigation systems adopt GPS and the Global Navigation Satellite System (GLONASS) to track the user’s position. Important applications of outdoor navigation systems include wayfinding for vehicles, pedestrians, and blind people [1, 2]. In indoor environments, GPS cannot provide adequate tracking accuracy because of non-line-of-sight conditions [3]. This limitation hinders the use of GPS in indoor navigation systems, although it can be addressed by using “high-sensitivity GPS receivers or GPS pseudolites” [4]. However, the cost of implementation can be a barrier to applying these solutions in real-world scenarios.

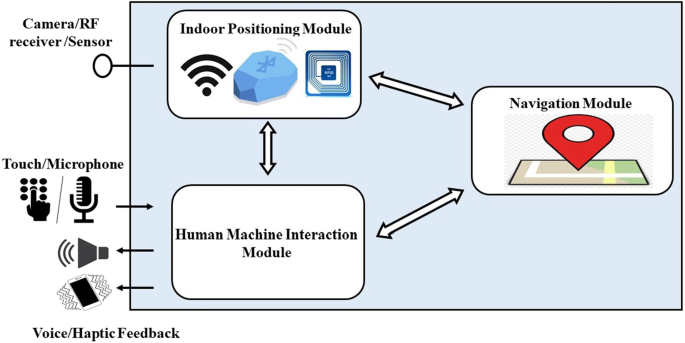

Indoor navigation systems have a broad range of applications, such as wayfinding for people in railway stations, bus stations, shopping malls, museums, airports, and libraries. Visually impaired people also benefit from indoor navigation systems. Navigating indoor areas is more difficult than navigating outdoor areas: indoor areas contain many types of obstacles, which increases the difficulty of implementing navigation systems. A general block diagram of a human indoor navigation system is illustrated in Fig. 1.

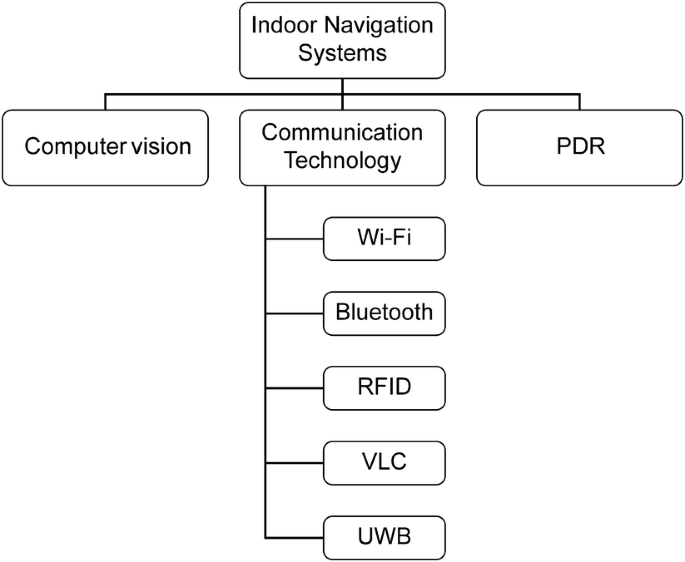

A human indoor navigation system mainly consists of three modules: (1) an indoor positioning system module, (2) a navigation module, and (3) a human–machine interaction (HMI) module. The indoor positioning system estimates the user’s position, the navigation module calculates routes from the user’s current location to the destination, and the HMI module lets the user interact with the system and delivers instructions to the user. Since GPS-based indoor positioning is not effective, methods based on computer vision, PDR, and RF signals are used for indoor positioning. Figure 2 illustrates the hierarchical classification of indoor navigation systems according to the positioning technologies they adopt.

Computer vision-based systems employ omnidirectional cameras, 3D cameras or built-in smartphone cameras to extract information about indoor environments. Various image processing algorithms, such as Speeded Up Robust Features (SURF) [5], Gist features [6], and the Scale Invariant Feature Transform (SIFT) [7], have been utilized for feature extraction and matching. Along with feature extraction algorithms, conventional approaches to vision-based positioning and navigation also adopt clustering and matching algorithms. In recent years, computer vision-based navigation systems have also adopted deep learning methodologies. Deep learning models contain multiple processing layers that learn features from the input data without an explicit feature engineering process [8]. As a result, deep learning-based approaches have become prominent among object detection and classification methods. Egomotion-based position estimation methods are also utilized in computer vision-based navigation systems [9]. The egomotion approach estimates the camera’s motion, and hence its position, relative to the surrounding environment.

PDR methods estimate the user’s current position from past positions by utilizing data from accelerometers, gyroscopes, magnetometers, etc. The user’s position is calculated by combining the step length, the number of steps and the heading angle of the user [10, 11]. Because dead reckoning accumulates position errors over time due to drift [12], most recent navigation systems integrate other positioning technologies with PDR or introduce sensor data fusion methods to reduce these errors.
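The step-and-heading update described above can be sketched as follows. This is a minimal illustration, assuming each detected step already comes with a step length in metres and a heading in radians measured counterclockwise from the +x axis; step detection and step-length estimation are out of scope, and the walk itself is hypothetical. The second track shows why drift matters: a constant heading bias rotates every step.

```python
import math

def pdr_update(x, y, step_length, heading):
    """One dead-reckoning step: move step_length metres along heading (radians)."""
    return x + step_length * math.cos(heading), y + step_length * math.sin(heading)

def pdr_track(start, steps):
    """Accumulate a trajectory from a start point and (step_length, heading) pairs."""
    x, y = start
    track = [(x, y)]
    for step_length, heading in steps:
        x, y = pdr_update(x, y, step_length, heading)
        track.append((x, y))
    return track

# Hypothetical walk: four 0.7 m steps east, then two 0.7 m steps north.
steps = [(0.7, 0.0)] * 4 + [(0.7, math.pi / 2)] * 2
trajectory = pdr_track((0.0, 0.0), steps)

# A constant 2-degree heading bias (e.g. uncorrected gyroscope drift) rotates
# every step, so the end-point error grows with the distance walked.
biased = pdr_track((0.0, 0.0), [(length, heading + math.radians(2))
                                for length, heading in steps])
error = math.dist(trajectory[-1], biased[-1])
```

Here the true track ends near (2.8, 1.4) m, and the biased track misses it by roughly 0.11 m after only six steps; the error keeps growing as the walk continues, which is the motivation for fusing PDR with other positioning sources.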

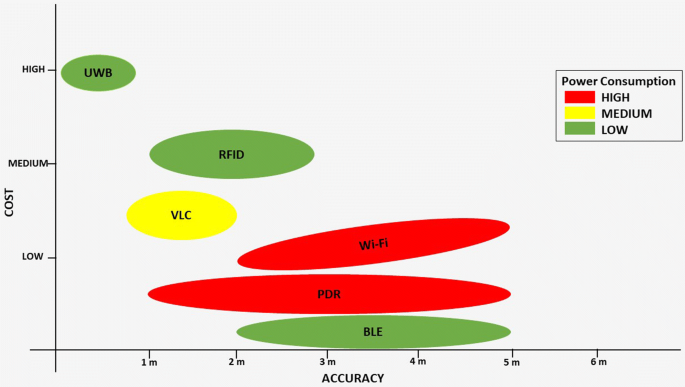

Communication-based technologies for indoor positioning include RFID, Wi-Fi, visible light communication (VLC), UWB and Bluetooth. RFID systems consist of an RFID reader and RFID tags attached to objects. There are two types of RFID tags, namely, active and passive. Most recent RFID-based navigation systems use passive tags because they do not require an external power source. RFID-based systems utilize received signal strength (RSS), angle of arrival (AOA), time of arrival (TOA) and time difference of arrival (TDOA) for position estimation [13]. In indoor environments, however, all these methods except RSS may fail to estimate the user’s position accurately due to non-line-of-sight conditions. The popular RSS-based positioning approaches are trilateration and fingerprinting [14]. RFID technology is widely implemented in navigation systems because of its simplicity, cost efficiency, and long effective range. Wi-Fi-based approaches suit indoor environments with a sufficient number of Wi-Fi access points; they do not require dedicated infrastructure and can instead reuse existing building infrastructure, because most current buildings are equipped with Wi-Fi access points. Wi-Fi-based indoor navigation systems use RSS fingerprinting, triangulation or trilateration for positioning [15]. Bluetooth-based systems achieve accuracy similar to that of Wi-Fi-based systems and use Bluetooth low energy (BLE) beacons as RF signal sources to track users through proximity sensing or RSSI fingerprinting [16]. In recent systems, smartphones commonly serve as receivers for both Bluetooth and Wi-Fi signals. VLC-based systems use the existing LED or fluorescent lamps within buildings, which keeps their cost low, and such lamps are becoming ubiquitous in indoor areas.
The light emitted by the lamps is detected using smartphone cameras or a dedicated photodetector. TOA, AOA, and TDOA are the most popular measurement methods in VLC-based positioning systems [17]. UWB-based positioning systems can provide centimeter-level accuracy, which is far better than Wi-Fi-based or Bluetooth-based methods. UWB uses TOA, AOA, TDOA, and RSS-based methods for position estimation [18]. A comparison of various indoor positioning technologies in terms of accuracy, implementation cost and power consumption is shown in Fig. 3.
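The two RSS-based approaches named above, trilateration and fingerprinting, can be sketched as follows. The ranging step assumes a log-distance path-loss model, \(\mathrm{RSS}(d) = \mathrm{RSS}_0 - 10\,n\log_{10} d\), with hypothetical parameters \(\mathrm{RSS}_0 = -40\) dBm at 1 m and \(n = 2\); real deployments calibrate these per site. Trilateration is solved by linear least squares, and fingerprinting is a plain k-nearest-neighbour match over a hypothetical offline database. Neither is taken from a specific cited system.

```python
import math

# Assumed log-distance path-loss parameters (site-specific in practice).
RSS_AT_1M = -40.0    # dBm at 1 m from the transmitter (hypothetical)
PATH_LOSS_EXP = 2.0  # free-space exponent; indoor values are usually higher

def rss_to_distance(rss_dbm, rss0=RSS_AT_1M, n=PATH_LOSS_EXP):
    """Invert RSS(d) = RSS0 - 10*n*log10(d) to get a range estimate in metres."""
    return 10 ** ((rss0 - rss_dbm) / (10 * n))

def distance_to_rss(d, rss0=RSS_AT_1M, n=PATH_LOSS_EXP):
    """Forward model, used here only to synthesise test readings."""
    return rss0 - 10 * n * math.log10(d)

def trilaterate(anchors, distances):
    """Least-squares (x, y) from >= 3 non-collinear anchors and their ranges.

    Subtracting the first circle equation from each other one gives linear rows
    2(xi-x1)x + 2(yi-y1)y = d1^2 - di^2 + xi^2 - x1^2 + yi^2 - y1^2.
    """
    (x1, y1), d1 = anchors[0], distances[0]
    rows = []
    for (xi, yi), di in zip(anchors[1:], distances[1:]):
        a = (2 * (xi - x1), 2 * (yi - y1))
        b = d1**2 - di**2 + xi**2 - x1**2 + yi**2 - y1**2
        rows.append((a, b))
    # Normal equations (A^T A) p = A^T b, solved in closed form for 2 unknowns.
    s_xx = sum(a[0] * a[0] for a, _ in rows)
    s_xy = sum(a[0] * a[1] for a, _ in rows)
    s_yy = sum(a[1] * a[1] for a, _ in rows)
    t_x = sum(a[0] * b for a, b in rows)
    t_y = sum(a[1] * b for a, b in rows)
    det = s_xx * s_yy - s_xy * s_xy
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is undetermined")
    return (s_yy * t_x - s_xy * t_y) / det, (s_xx * t_y - s_xy * t_x) / det

def knn_fingerprint(database, observed, k=3):
    """Average the positions of the k reference points whose stored RSS vectors
    are closest (Euclidean distance in dBm) to the observed RSS vector."""
    nearest = sorted(database, key=lambda entry: math.dist(entry[1], observed))[:k]
    return (sum(pos[0] for pos, _ in nearest) / len(nearest),
            sum(pos[1] for pos, _ in nearest) / len(nearest))

# Trilateration with synthetic, noise-free readings from three access points.
anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
true_pos = (3.0, 4.0)
readings = [distance_to_rss(math.dist(a, true_pos)) for a in anchors]
estimate = trilaterate(anchors, [rss_to_distance(r) for r in readings])

# Fingerprinting against a tiny hypothetical offline survey:
# each entry is (reference position in m, RSS vector in dBm).
database = [
    ((2.0, 2.0), [-50.0, -60.0, -62.0]),
    ((4.0, 4.0), [-55.0, -58.0, -59.0]),
    ((8.0, 8.0), [-70.0, -50.0, -52.0]),
]
fingerprint_estimate = knn_fingerprint(database, [-51.0, -60.0, -61.0], k=2)
```

With exact ranges, trilateration recovers (3, 4); with real, noisy RSS the range errors grow with distance, which is one reason fingerprinting is often preferred indoors.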

The navigation module determines the user’s route on the constructed indoor map, starting from the user’s current position. It mainly consists of a map that represents the indoor environment and a method for planning navigation routes. The most commonly used route planning methods are the A* algorithm [19], Dijkstra’s algorithm [20], the D* algorithm [21] and Floyd’s algorithm [22]. In addition, some systems provide mapless navigation. All these systems are discussed in the upcoming sections.
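Route planning on a grid map can be illustrated with a compact A* search. This is a generic textbook version, assuming a 4-connected occupancy grid (0 = free, 1 = blocked), unit move costs and the Manhattan-distance heuristic, which never overestimates on such a grid; replacing the heuristic with zero turns it into Dijkstra’s algorithm. D* and Floyd’s algorithm are not shown.

```python
import heapq

def a_star(grid, start, goal):
    """A* on a 4-connected occupancy grid; returns a list of cells or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry superseded by a cheaper one
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None  # goal unreachable

# Hypothetical floor plan: a wall across row 1 with a gap at the right end.
grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
route = a_star(grid, (0, 0), (2, 0))
```

The search detours through the gap at column 3, giving a 9-cell route from (0, 0) to (2, 0).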

The human–machine interaction module allows users to communicate with the navigation system, for example to set or change the destination. The HMI module gives the user information and guidance about the route and location through acoustic feedback [23] or haptic feedback [24]. For visually impaired users, audio or vibration feedback is widely implemented in the HMI module.

In the past, a significant number of surveys on indoor positioning technologies and indoor navigation systems have been published [16,17,18, 25,26,27,28]. Most of these surveys concentrated on positioning rather than navigation. In addition, they considered only a single technology, such as wireless-based, visible light-based or vision-based systems. In this work, we summarize recent advancements and developments in indoor navigation and positioning systems that use different types of approaches, such as computer vision, sensors, RF signals, and visible light. The survey primarily deals with human navigation systems, including assistive systems for people with visual impairments (VI). Some robotic navigation systems are also detailed in this paper.

Indoor positioning and wayfinding systems

Computer vision-based navigation and wayfinding systems

One of the main applications of indoor navigation is wayfinding for people with VI. ISANA [29] is a vision-based navigation system for visually impaired individuals in indoor environments. The system prototype contains a Google Tango mobile device and a smart cane with a keypad and two vibration motors. The Google Tango device has an RGB-D camera, a wide-angle camera, and a 9-axis inertial measurement unit (IMU). The key contributions of ISANA are: (1) an “indoor map editor” to create semantic maps of indoor areas, (2) an “obstacle detection and avoidance method” that aids real-time path planning and (3) a smart cane called the “CCNY Smart Cane” that can alleviate issues associated with voice recognition software. The indoor map editor extracts the geometric entities of each floor, such as lines, text, polygons, and ellipses, from the input CAD model of the indoor areas. It can recognize the locations of rooms, doors and hallways, the spatial and geometrical relationships between room labels, and global 2-dimensional traversal grid map layers. Prim’s minimum spanning tree algorithm is employed to extract the above-mentioned semantic information. ISANA also proposes a novel map alignment algorithm to localize users in the semantic map; it utilizes the 6-DOF pose estimation and the area description file provided by Google Tango VPS. The navigation module uses the global navigation graph constructed from the 2-dimensional grid map layer along with the A* algorithm for path planning. The obstacle detection, motion estimation and avoidance methods introduced in ISANA help ensure the safety of visually impaired users. To detect obstacles, ISANA uses the RGB-D camera to acquire depth data. The 3-dimensional point cloud or depth data are rasterized and passed through a denoising filter to remove outliers.
The three-dimensional points are aligned with the horizontal plane by a deskewing process. A 2-dimensional projection-based approach is introduced for obstacle avoidance; it produces a time-stamped horizontal map for path planning and a time-stamped vertical map for obstacle alerts. An algorithm based on connected component labeling [30] is adapted to detect objects when creating the horizontal and vertical maps. A Kalman filter is employed to estimate the motion of obstacles from the time-stamped maps. ISANA uses an Android text-to-speech library to speak instructions and feedback to users and a speech-to-text module [31] to recognize users’ voice inputs. The CCNY Smart Cane provides haptic feedback to the user in noisy environments, and it also has a keypad to set destinations and an IMU to track the orientation of the user and the cane.
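The exact Kalman filter formulation used in ISANA is not given here, so the following is only a generic constant-velocity Kalman filter for one axis, of the kind that could track an obstacle’s position across successive time-stamped maps. The noise values `q` and `r` and the diagonal process-noise model are assumptions; in practice one filter per axis (or a joint four-state filter) would be run.

```python
class ConstantVelocityKF:
    """1-D constant-velocity Kalman filter with state [position, velocity]."""

    def __init__(self, pos0, q=0.01, r=0.05):
        self.x = [pos0, 0.0]               # initial position, unknown velocity
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # initial state covariance
        self.q = q                         # process noise (assumed q * I)
        self.r = r                         # measurement noise variance

    def predict(self, dt):
        p, v = self.x
        self.x = [p + v * dt, v]
        P = self.P
        # P <- F P F^T + q*I with F = [[1, dt], [0, 1]], expanded by hand.
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1] + self.q
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1] + self.q
        self.P = [[p00, p01], [p10, p11]]

    def update(self, z):
        """Fuse a position measurement z (H = [1, 0])."""
        P = self.P
        s = P[0][0] + self.r                 # innovation variance
        k0, k1 = P[0][0] / s, P[1][0] / s    # Kalman gain
        innovation = z - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]

# Synthetic obstacle moving at 0.5 m/s, observed every 0.1 s without noise.
kf = ConstantVelocityKF(pos0=2.0)
dt = 0.1
for k in range(1, 201):
    kf.predict(dt)
    kf.update(2.0 + 0.5 * k * dt)
```

After a short transient the velocity estimate settles at about 0.5 m/s, so the filter can also predict where the obstacle will be a few frames ahead, which is what obstacle alerts need.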

Tian et al. [32] developed a system to help blind persons navigate indoor environments. The system consists of a door detection module and a text recognition module. The door detector combines the Canny edge detector with a curvature property-based corner detector. The relative position of a door is determined by measuring the angle between its top edge and the horizontal axis. A mean shift-based clustering algorithm groups similar pixels to improve text extraction. A text localization model was designed on the assumption that text characters have shapes with closed boundaries and at most two holes. Text recognition was performed with the Tesseract and OmniPage optical character recognition (OCR) software. The reported results show that the false positive rate increased for images acquired under challenging conditions, such as low light and partial occlusion.
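The pixel-grouping step can be illustrated with a flat-kernel mean shift over RGB values. The kernel shape, bandwidth and mode-merging rule below are assumptions for illustration, not details reported for [32].

```python
import math

def mean_shift(points, bandwidth, max_iter=100, tol=1e-6):
    """Flat-kernel mean shift: shift each point to the mean of all points within
    `bandwidth` until it stops moving, then merge converged modes into clusters."""
    modes = []
    for p in points:
        m = list(p)
        for _ in range(max_iter):
            # Never empty: the current mean always has a point within bandwidth.
            window = [q for q in points if math.dist(q, m) <= bandwidth]
            new_m = [sum(coord) / len(window) for coord in zip(*window)]
            shift = math.dist(new_m, m)
            m = new_m
            if shift < tol:
                break
        modes.append(m)
    # Modes closer than half the bandwidth are treated as the same cluster.
    centres, labels = [], []
    for m in modes:
        for idx, c in enumerate(centres):
            if math.dist(c, m) <= bandwidth / 2:
                labels.append(idx)
                break
        else:
            centres.append(m)
            labels.append(len(centres) - 1)
    return centres, labels

# Hypothetical sign pixels: dark text strokes and a light background.
pixels = [(10, 10, 10), (12, 9, 11), (8, 11, 10),
          (240, 238, 241), (242, 240, 239), (239, 241, 240)]
centres, labels = mean_shift(pixels, bandwidth=30.0)
```

The dark and light pixels fall into two clusters, so the text strokes can be separated from the background before localization and OCR. Mean shift does not need the number of clusters in advance, only the bandwidth.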

A wearable navigation system for people with VI based on an RGB-D camera was proposed in [33]; it uses sparse features and dense point clouds to estimate camera motion. The position and orientation of objects in the indoor environment are identified using a corner-based real-time motion estimation algorithm [34], together with an iterative closest point algorithm that limits drift and errors in pose estimation. A simultaneous localization and mapping (SLAM) algorithm provides the mapping [35, 36]. A modified D* Lite algorithm guides the user along the shortest path. Although the D* algorithm can handle dynamic changes in the surroundings, small changes in the map can change the generated walking path, which makes navigation more complicated for people with VI. The standard D* algorithm generates the shortest path as a set of grid-map cells that connects the current location to the final destination while excluding untraversable cells. In this work, instead of directly following the generated cells, the system generates a valid waypoint that is traversable and lies at some distance from obstacles. In this waypoint generation method, the farthest cell that is visible and traversable from the current location is first selected from the cells generated by the D* algorithm. A second cell is then selected that is far, visible and traversable from the first selected cell. Finally, a cell near the first selected cell with a lower cost-function value is computed. However, some of the maps were inconsistent because the map merging technique was unable to correct deformations in the merged maps.
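The first selection step of the waypoint method, choosing the farthest cell of the planned path that is traversable and visible from the current cell, can be sketched on an occupancy grid. Bresenham’s line algorithm is used here as an assumed visibility test, the hard-coded path stands in for D* Lite output, and the second-point selection and cost-function refinement are omitted.

```python
def line_cells(a, b):
    """Grid cells crossed by the segment a -> b (Bresenham's line algorithm)."""
    (r, c), (r1, c1) = a, b
    dr, dc = abs(r1 - r), -abs(c1 - c)
    sr = 1 if r < r1 else -1
    sc = 1 if c < c1 else -1
    err = dr + dc
    cells = []
    while True:
        cells.append((r, c))
        if (r, c) == (r1, c1):
            return cells
        e2 = 2 * err
        if e2 >= dc:
            err += dc
            r += sr
        if e2 <= dr:
            err += dr
            c += sc

def visible(grid, a, b):
    """True if every cell on the straight line a -> b is free (0)."""
    return all(grid[r][c] == 0 for r, c in line_cells(a, b))

def farthest_visible_waypoint(grid, path):
    """Farthest cell on the planned path with a clear line of sight from path[0]."""
    current = path[0]
    for cell in reversed(path[1:]):
        if visible(grid, current, cell):
            return cell
    return path[1] if len(path) > 1 else current

# Hypothetical map: a wall segment in row 2 forces a detour to the right.
grid = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [1, 1, 1, 0, 0],
    [0, 0, 0, 0, 0],
]
planned = [(0, 0), (0, 1), (0, 2), (0, 3), (1, 3), (2, 3),
           (3, 3), (3, 2), (3, 1), (3, 0)]
waypoint = farthest_visible_waypoint(grid, planned)
```

Instead of stepping through ten cells, the user is sent straight toward (2, 3), the last path cell with a clear line of sight, so small map updates that reshuffle intermediate cells do not immediately change the instruction.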

The indoor wayfinding system for people with VI in [37] uses Google Glass and an Android phone. Its object detection method uses the Canny edge detector and the Hough line transform. Since walls are among the main obstacles in indoor environments, the floor detection algorithm identifies the presence of walls from the height of the detected floor region. However, the object detection method failed on bulletin boards and on low-contrast wall pixels.

In [38], the Continuously Adaptive Mean Shift (CAMShift) algorithm was combined with the D* algorithm to help blind people navigate in indoor areas. The method uses image subtraction for object detection, builds a color histogram of each detected object and applies histogram back-projection. The CAMShift algorithm provides tracking and localization of the users, and the D* algorithm calculates the shortest route between the source and the destination.
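The back-projection and mean shift steps at the core of CAMShift can be sketched in pure Python. Hue values are assumed to lie in 0–179 (the OpenCV convention), and the bin count, frame and initial window are illustrative. The window size is kept fixed here, whereas CAMShift also adapts the window size and orientation from the image moments.

```python
def hue_histogram(hues, bins=16, max_hue=180):
    """Histogram of the target's hue values, scaled so the largest bin is 1.0."""
    hist = [0] * bins
    for h in hues:
        hist[h * bins // max_hue] += 1
    peak = max(hist) or 1
    return [v / peak for v in hist]

def back_project(hue_image, hist, max_hue=180):
    """Replace each pixel's hue by its histogram bin value: a per-pixel
    likelihood that the pixel belongs to the tracked object."""
    bins = len(hist)
    return [[hist[h * bins // max_hue] for h in row] for row in hue_image]

def mean_shift_window(prob, window, max_iter=20):
    """Move a fixed-size window (top, left, height, width) to the centroid of
    the probability mass it covers until it stops moving."""
    top, left, hgt, wid = window
    rows, cols = len(prob), len(prob[0])
    for _ in range(max_iter):
        m00 = m10 = m01 = 0.0  # zeroth- and first-order image moments
        for r in range(top, top + hgt):
            for c in range(left, left + wid):
                w = prob[r][c]
                m00 += w
                m10 += w * r
                m01 += w * c
        if m00 == 0:
            break  # nothing to track inside the window
        cr, cc = m10 / m00, m01 / m00
        new_top = min(max(round(cr - (hgt - 1) / 2), 0), rows - hgt)
        new_left = min(max(round(cc - (wid - 1) / 2), 0), cols - wid)
        if (new_top, new_left) == (top, left):
            break
        top, left = new_top, new_left
    return top, left, hgt, wid

# Hypothetical 10x10 hue frame: background hue 100, a 2x2 object of hue 10.
frame = [[100] * 10 for _ in range(10)]
for r in (6, 7):
    for c in (6, 7):
        frame[r][c] = 10
prob = back_project(frame, hue_histogram([10, 10, 11, 12]))
tracked = mean_shift_window(prob, (4, 4, 4, 4))
```

Starting from a window that only partly covers the object, the window recentres on it in one shift and then stops moving.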

Bai et al. [39] developed a vision-based navigation system for people with VI that uses a cloud computing platform. The prototype consists of a helmet-mounted stereo camera, a smartphone, a web application, and a cloud platform. The helmet also contains a speaker and earphones to support human–machine interaction. The stereo camera captures information about the surroundings and forwards it to the smartphone over Bluetooth. The smartphone acts as a bridge between the user and the cloud platform. All the core activities of the system, such as object and obstacle detection and recognition, speech processing, and navigation route planning, are performed on the cloud platform. The cloud platform contains three modules, namely, speech processing, perception, and navigation. The speech processing module implements a recurrent neural network-based natural language processing algorithm [40, 41] to analyze the user’s voice commands. The perception module makes users aware of their surroundings and helps blind users live more independently; it fuses object detection and recognition functions [42], scene parsing functions [43, 44], OCR [45, 46], currency recognition functions [47] and traffic light recognition functions [48] to improve the blind user’s awareness of the environment. All the functionalities in the perception module are based on deep learning algorithms. The navigation module implements a vision-based SLAM algorithm to construct the map. The SLAM algorithm extracts image features of the surrounding environment and reconstructs the path of the camera’s motion. Preassigned sighted helpers use the web application to provide additional support to blind users in complex scenarios.

Athira et al. [49] proposed a vision-based indoor navigation system for shopping malls. The system uses the GIST feature descriptor, which improves the processing efficiency of captured images and reduces memory requirements. Its main functions are keyframe extraction, topological map creation, localization, and routing. Keyframes are the important frames extracted from walkthrough videos and are used to create a topological map. For each frame, the L2 norm (Euclidean distance) between two descriptors is calculated; if it exceeds a specific threshold, the frame is considered a “keyframe”. The direction of each keyframe is then detected by comparing the present frame separately with the left and right parts of the prior frame, and this is used to create the map. Once the direction of the keyframes is detected, 2D points are calculated in the map. For localization, an image captured at the user’s current position is compared with the existing keyframes using the L2 norm.
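Keyframe selection and nearest-keyframe localization by L2 distance can be sketched as below. Short 2-D vectors stand in for GIST descriptors, and comparing each frame with the most recent keyframe is an assumption, since the exact pair of descriptors compared is not stated above; the threshold is also illustrative.

```python
import math

def extract_keyframes(descriptors, threshold):
    """Indices of keyframes: a frame becomes a keyframe when its descriptor is
    farther than `threshold` (L2 norm) from the most recent keyframe.
    The first frame is always kept."""
    keyframes = [0]
    for i in range(1, len(descriptors)):
        if math.dist(descriptors[i], descriptors[keyframes[-1]]) > threshold:
            keyframes.append(i)
    return keyframes

def localize(query, descriptors, keyframes):
    """Index of the keyframe whose descriptor is nearest (L2) to the query."""
    return min(keyframes, key=lambda i: math.dist(query, descriptors[i]))

# Hypothetical descriptors from a walkthrough video, one per frame.
descriptors = [(0.0, 0.0), (0.1, 0.0), (0.2, 0.1),
               (1.0, 1.0), (1.05, 1.0), (2.0, 2.1)]
keys = extract_keyframes(descriptors, threshold=0.5)
position = localize((1.1, 0.9), descriptors, keys)
```

Frames 0, 3 and 5 become keyframes because each marks a large change in appearance, and a query image resembling frame 3 is localized to that keyframe, that is, to the corresponding node of the topological map.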

Bookmark [50] is an infrastructure-free indoor navigation system that uses the existing barcodes of books in a library and guides library visitors to any book’s position when they scan the barcodes of books in the library. Bookmark was developed as an application that can run on any phone with a camera, and it provides the user with a detailed map of the library. The map contains the locations of stairs, elevators, doors, exits, obstacles (pillars or interior walls) and each bookcase. The map is converted to Scalable Vector Graphics (SVG) format, and the locations are represented by different color codes. To link the books to the map, a book database of call numbers (a unique alphanumeric identifier associated with each book) and their associated locations is created. When a user scans the barcode of a book to find its location, Bookmark’s server side collects information about the book, including its call number, from an existing library API. The system then looks up the call number in the book database to retrieve the location for the user. Bookmark implements the A* algorithm to plan the route between two points of interest. Since Bookmark does not use a positioning technique, the system is unaware of the user’s current position until he/she completes the navigation or scans the next barcode. The major limitations of the system are books without barcodes and books misplaced on the wrong shelves.

Li et al. [51] proposed a wearable virtual usher to help users find routes in indoor environments. It consists of a wearable camera that captures the frontal scene, headphones that deliver verbal routing instructions to reach a specific destination, and a personal computer. The aim of the system is to help users with indoor wayfinding using egocentric visual perception. A hierarchical contextual structure of interconnected nodes uses cognitive knowledge to estimate the route. The hierarchical structure has three levels: (1) the top level, where the root node represents the building itself; (2) the middle level, representing zones and areas inside the building; and (3) the bottom level, which corresponds to locations inside each area. The structure reflects the human mental model and understanding of an indoor environment. SIFT is used for scene recognition. A “self-adaptive dynamic-Bayesian network” is developed to find the best navigation route; it can modify its parameters according to the current visual frame. Moreover, this network can address uncertainties in perception and is able to predict relevant routes. The reported results demonstrate that the system can help users reach their destination without requiring close attention or a detailed understanding of the map.

ViNav [52] is a vision-based indoor navigation system developed for smartphones. The system provides indoor mapping, localization and navigation by utilizing visual data as well as data from the smartphone’s built-in IMU. ViNav is designed as a client–server architecture. The client collects visual imagery (images and videos) and data from sensors including the accelerometer, barometer, and gyroscope. The server receives these data from the client and builds 3-dimensional models from them. The server comprises two modules. The first module builds 3-dimensional models of the indoor environment, using structure from motion on crowdsourced imagery captured by clients. The accelerometer and gyroscope data are utilized to detect user trajectories. Moreover, Wi-Fi fingerprints collected along the paths traveled by users are combined with the 3D model to localize the user in the indoor area. The second module facilitates navigation by calculating routes with pathfinding algorithms. Obstacle data are retrieved from the constructed 3D models, and navigation meshes are computed by combining the obstacle data with pedestrian traveling paths retrieved from crowdsourced user traces. Barometer readings are utilized to detect stairs, elevators, and floor changes. The performance evaluation experiments demonstrate that ViNav can locate users within 2 s with an error of no more than 1 m.
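Barometer-based floor-change detection can be illustrated with the standard-atmosphere barometric formula, \(h = 44330\,\bigl(1 - (p/p_0)^{1/5.255}\bigr)\) m with \(p_0 = 1013.25\) hPa. The uniform 3.5 m floor height and simple rounding are hypothetical simplifications, not ViNav’s method; weather-driven pressure drift would also need compensation in practice.

```python
def pressure_to_altitude(p_hpa, p0_hpa=1013.25):
    """Standard-atmosphere altitude (m) for a pressure reading in hPa."""
    return 44330.0 * (1.0 - (p_hpa / p0_hpa) ** (1.0 / 5.255))

def floor_change(p_start_hpa, p_now_hpa, floor_height_m=3.5):
    """Floors climbed (+) or descended (-) since the start reading, assuming a
    uniform (hypothetical) floor height."""
    dh = pressure_to_altitude(p_now_hpa) - pressure_to_altitude(p_start_hpa)
    return round(dh / floor_height_m)

# A drop of 0.85 hPa near sea level corresponds to about 7 m of ascent.
floors = floor_change(1013.25, 1012.4)
```

Only the pressure difference between two readings matters here, so an inaccurate \(p_0\) has little effect on the estimated floor change over a short walk.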

Rahman et al. [53] proposed a vision-based navigation system using a smartphone. The smartphone camera captures images of the scene in front of the user, and the captured images are compared with pre-stored images to check whether they contain any obstacles. The proposed algorithm, intended to assist people with visual impairments, performs both obstacle detection and pathfinding. Once the smartphone captures an image, the obstacle detection technique first extracts the region of interest from the image and compares it with images in the database. If an obstacle is detected, the pathfinding technique suggests an alternate path for the user by checking the areas to the left and right of the extracted region of interest. In a test environment, the system achieved an accuracy of 90%.

Reference [54] examined the performance of three indoor navigation systems that use different techniques to guide people with visual impairments in indoor environments. The work focused on developing three navigation systems that use image matching, QR codes, and BLE beacons, respectively, to localize the user, and on testing them in a real indoor environment. The image matching-based system included a novel CNN model trained on thousands of images to identify indoor locations. The QR code-based system used existing QR code libraries such as ZXing and ZBar. The BLE beacon-based system adopted a commercially available indoor positioning SDK to localize the user in indoor areas. All three systems were implemented on a smartphone for real-time evaluation. Evaluation results show that the QR code and image matching-based methods outperformed the BLE beacon-based system for people with visual impairments in indoor environments.

Tyukin et al. [55] proposed an indoor navigation system for autonomous mobile robots. The system uses an image processing-based approach to navigate the robot in indoor areas and consists of “a simple monocular TV camera” and “color beacons”. Each color beacon is a passive device with three areas of different colors. All these colors can be visually identified, and the beacon surfaces are matte rather than glowing. The operation of the system can be divided into three steps: (1) detection of the color beacons; (2) relative map generation, which locates the detected beacons in the indoor space with respect to the TV camera; and (3) identification of the robot coordinates in the absolute map. An algorithm combining several image processing techniques was introduced for beacon detection. First, the image from the TV camera is denoised and image defects are smoothed with a Gaussian filter. After preprocessing, the image is converted to HSV. The algorithm then selects each color in turn and applies a smooth continuous function to classify the pixels. Color mask images are generated by averaging the grayscale images from each HSV channel. Finally, the algorithm finds the pixel with the maximum intensity in the color mask and fills the pixels around it. This step is repeated until all colors used in the beacons are processed. Once the centers of the colored areas of a beacon are identified, the magnitude and direction of the vectors connecting these centers are estimated. There are two vectors: one connects the centers of the first and second colored areas, and the other connects the centers of the second and third colored areas. The differences between these two vectors are used to identify the beacons.
A navigation algorithm is introduced to estimate the coordinates of the beacons and the absolute coordinates of the TV camera. The relative coordinates of the beacons are estimated using the beacon height and the aperture angle of the lens. The resulting relative map is an image in which the relative positions of the beacons are represented as colored dots. A three-dimensional transformation is applied to the relative beacon coordinates to create the absolute map. Experiments with the system show that the detection algorithm detects beacons only when they are within 1.8 m of the TV camera. The average deviation of the calculated absolute coordinates from the true values was only 5 mm.
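The relative-coordinate step can be sketched with a pinhole camera model: the horizontal aperture (field-of-view) angle gives the focal length in pixels, and the known beacon height gives the forward range by similar triangles. This is an assumed formulation for illustration, not the exact equations of [55]; it ignores lens distortion and camera tilt and assumes square pixels.

```python
import math

def focal_length_px(image_width_px, horizontal_fov_deg):
    """Pinhole focal length in pixels from image width and aperture angle."""
    return (image_width_px / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)

def beacon_relative_position(u_center_px, beacon_height_px, image_width_px,
                             horizontal_fov_deg, beacon_height_m):
    """(lateral_m, forward_m) of a beacon in the camera frame.

    Forward range by similar triangles: Z = f * H_real / h_image.
    Lateral offset: X = (u - c_x) * Z / f, with c_x the image centre column.
    """
    f = focal_length_px(image_width_px, horizontal_fov_deg)
    forward = f * beacon_height_m / beacon_height_px
    lateral = (u_center_px - image_width_px / 2) * forward / f
    return lateral, forward

# Hypothetical 640-px-wide camera with a 90-degree aperture angle; a 0.3 m
# beacon appears 96 px tall, centred at column 480.
lateral, forward = beacon_relative_position(480, 96, 640, 90.0, 0.3)
```

The beacon lies about 1.0 m ahead and 0.5 m to the right of the optical axis. Because the apparent height shrinks with distance, small beacons quickly become too few pixels tall to detect, which is consistent with a limited detection range.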

Bista et al. [56] proposed a vision-based navigation method for robots in indoor environments. The whole navigation process relies on 2-dimensional image data instead of the 3-dimensional data used in existing methods. The indoor area is represented as a collection of reference images obtained during an earlier learning stage. The method enables the robot to navigate along the learned route with the help of a 2-dimensional line segment detector. To detect line segments in the acquired images, a fast and accurate line detector called EDLines [57] is employed. The indoor maps are created from key images and their line segments. During map construction, the first acquired image is taken as a key image. The line segments of the first key image are matched with those of the next (second) image to form a set of matched line segments. For matching, a method based on the Line Band Descriptor was adopted [58]: matching relies mainly on the Line Band Descriptor, followed by geometric constraints and filters that remove false matches. Once the matched set of the key image and the next image is obtained, the method takes the next (third) image and performs the same line segment matching between the first key image and the current image. These steps produce two sets of matched lines. A trifocal tensor is utilized to find the two-view matches between these two sets. The trifocal tensor is a “\(3\times 3\times 3\) array that contains all the geometric relationships among three views”, and it requires three-view correspondences between lines. Two-view matches (matching the current image with the previous and next key images) are used for initial localization and for generating three-view correspondences. Three-view matches (matching the current image with the previous, next and second next key images) are used for mapping.
The previous and next key images of the currently acquired image share some line segments, which facilitates robot navigation and motion control. The rotational velocity of the robot is also derived from the three-view matches. The method was evaluated in three different indoor areas. The drift in the robot’s navigation path was only 3 cm and 5 cm in the first two experiments. In the third experiment, a large drift occurred in the robot’s path during the circular turn. An obstacle avoidance module to deal with dynamic objects in the indoor environment is left for future work.

Table 1 compares the computer vision-based indoor navigation systems.

Computer vision-based positioning and localization systems

The tasks of indoor localization, positioning, scene recognition and detection of specific objects, such as doors, are also considered here in the context of indoor navigation, since they can be extended to wayfinding in indoor areas.

Tian et al. [59] developed a door detection method to help people with VI access unfamiliar indoor areas. The prototype consists of a miniature head-mounted camera that captures images and a computer that runs the detection algorithm and provides speech output. A “generic geometric door model” built on stable edge and corner features enables door detection. Objects with similar shapes and sizes, such as bookshelves and cabinets, are distinguished from doors using additional geometric information. The reported results indicate a true positive rate of 91.9%.

The Blavigator project included a computer vision module [60] for assisting blind people in both indoor and outdoor areas. Its object collision detection algorithm uses a “2D Ensemble Empirical Mode Decomposition image optimization algorithm” and a “two-layer disparity image segmentation algorithm” to identify nearby objects. Two areas of interest are defined near the user to guarantee their safety: depth information at 1 m and 2 m is analyzed to retrieve information about obstacles in the path at these two distances.
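A minimal sketch of the two-distance check, assuming a depth patch (in metres) already cropped to the region in front of the user. The function name, the zero-means-missing convention and the band logic are hypothetical; the EEMD optimization and disparity segmentation steps of [60] are not reproduced.

```python
def classify_obstacles(depth_patch, near_m=1.0, far_m=2.0):
    """Classify the closest obstacle in a depth patch (metres) in front of the user.

    Returns "near" (within near_m), "far" (between near_m and far_m) or "clear".
    Zero or negative values are treated as missing depth readings.
    """
    valid = [d for row in depth_patch for d in row if d > 0]
    if not valid:
        return "clear"
    closest = min(valid)
    if closest <= near_m:
        return "near"
    if closest <= far_m:
        return "far"
    return "clear"
```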

An omnidirectional wearable system [61] for locating and guiding individuals in indoor environments combined GIST and SURF for feature extraction. Two levels of topological classification are defined in this system: global and local. The global classification considers all images as references, whereas the local classification is based on prior knowledge. A visual odometry module was developed by integrating extended Kalman filter monocular SLAM with omnidirectional sensors. The system was trained on 20,950 omnidirectional images and tested on 7027 images. The localization errors that occurred were caused by misclassified clusters.

Huang et al. [62] developed 3DLoc, a 3D feature-based indoor positioning system that runs on handheld smart devices and locates the user in real time. The system addresses the limitations of previous indoor navigation systems based on sensors and feature matching (e.g., SIFT and SURF) by using the 3D signatures of pictures of places to recognize them with high accuracy. The authors proposed an algorithm that extracts these signatures from pictures and robustly decodes them to identify the location. In the first stage, 3D features are extracted from the captured pictures. A 3D model is then constructed from these features using indoor geometry reasoning [63], and pattern recognition is performed to identify the 3D model. The authors proposed a K-locations algorithm to identify the exact location. If the captured images are insufficient for recognizing the location because of information loss, an augmented particle filter method is used. The inertial sensors of the mobile device provide real-time navigation while the user is moving. In the reported experiments, 90% of the errors were within 25 cm in location and 2° in orientation.

iNavigation [64] combines SIFT feature extraction with an approximate nearest neighbor algorithm, a k-d tree with Best Bin First (BBF) search, to perform positioning from ordinary sequential images. Inverse perspective mapping was used to find the distance when the user queried an image. Dijkstra’s algorithm was implemented to route the user along the shortest path. In this method, the locations of the landmark images were assigned manually; therefore, expanding the landmark image dataset requires a considerable amount of manual work.
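The routing step can be sketched with a textbook heap-based Dijkstra search. The graph below (landmark names and corridor lengths in metres) is a hypothetical example; [64] does not specify how its graph is represented.

```python
import heapq


def dijkstra(graph, source, target):
    """Shortest path on a weighted graph.

    graph: dict node -> list of (neighbour, edge_length).
    Returns (length, path); (inf, []) if target is unreachable.
    """
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    visited = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in visited:
            continue  # stale heap entry
        visited.add(u)
        if u == target:
            break
        for v, w in graph.get(u, []):
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    if target not in dist:
        return float("inf"), []
    # walk the predecessor links back from the target
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return dist[target], path[::-1]
```

For example, with `{"entrance": [("lobby", 5), ("corridor", 2)], "corridor": [("lobby", 1), ("lift", 7)], "lobby": [("lift", 3)]}`, the route from `"entrance"` to `"lift"` goes through the corridor and the lobby (6 m) rather than directly through the lobby (8 m).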

An image processing-based indoor localization method [65] for indoor navigation uses the principal component analysis (PCA)-SIFT [66] feature extraction mechanism to reduce the overall running time of the system compared with SIFT- or SURF-based methods. It also implements a Euclidean distance-based locality-sensitive hashing technique for rapid image matching. Introducing a confidence measure increased the precision of the system to 91.1%.
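Euclidean locality-sensitive hashing is commonly built from random Gaussian projections, h(v) = floor((a·v + b) / w). The sketch below uses a single hash table and hypothetical parameters (`num_hashes`, `w`, `seed`); it illustrates the general technique, not the specific configuration of [65].

```python
import math
import random


class EuclideanLSH:
    """Single-table p-stable LSH: h(v) = floor((a . v + b) / w), a ~ N(0, I), b ~ U[0, w)."""

    def __init__(self, dim, num_hashes=4, w=4.0, seed=0):
        rng = random.Random(seed)
        self.w = w
        self.a = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_hashes)]
        self.b = [rng.uniform(0, w) for _ in range(num_hashes)]
        self.table = {}

    def key(self, v):
        """Concatenate num_hashes quantised projections into one bucket key."""
        return tuple(
            math.floor((sum(x * y for x, y in zip(a, v)) + b) / self.w)
            for a, b in zip(self.a, self.b)
        )

    def add(self, label, v):
        self.table.setdefault(self.key(v), []).append((label, v))

    def query(self, v):
        """Re-rank only the candidates in v's bucket by exact Euclidean distance."""
        candidates = self.table.get(self.key(v), [])
        if not candidates:
            return None
        return min(candidates, key=lambda c: math.dist(c[1], v))[0]
```

Only the vectors that share a bucket are compared exactly, which is where the speed-up comes from. Practical systems usually keep several independent tables to reduce the chance of missing a true neighbour.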

The localization algorithm [67] for indoor navigation apps consists of an image edge detection module based on the Canny edge detector and a text recognition module based on the stroke width transform and the Tesseract and ABBYY FineReader OCR engines. Tesseract is free OCR software that supports various operating systems, and its development has been sponsored by Google. Tesseract can recognize text in more than 100 languages, including right-to-left languages such as Arabic. ABBYY FineReader is developed by ABBYY, a Russia-based company, and supports approximately 192 languages. Its latest version can also convert text in image files into various electronic document formats, such as PDF, Microsoft Word, Excel and PowerPoint. The experimental results showed that ABBYY FineReader is fast and achieves high recognition accuracy on a benchmark dataset used in OCR and information retrieval research.

Xiao et al. [68] proposed a computer vision-based indoor positioning system for large indoor areas using smartphones. The system uses static objects in indoor areas (doors and windows) as references for estimating the user's position. It consists of two main processes: (a) static object recognition and (b) position estimation. In the static object recognition process, the static object is first detected and identified using the Fast R-CNN algorithm [69], whose deep learning network is similar to the VGG16 network [70]. The pixel coordinates of the “control points” (physical feature points on the static object) in the image are used to calculate the position of the smartphone. These pixel coordinates are obtained by analyzing the test image and the identified reference image. The SIFT feature detector is used to extract feature points from both images, and a homography matrix is constructed from the matching feature point pairs. This homography matrix and the “control points” of the reference image are used to find the “control points” in the test image. The collinearity equations relating the “control points” in the image to the corresponding “control points” in space are then solved to estimate the position of the smartphone. The results show that the system achieves a position estimation accuracy within 1 m.
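Transferring the reference image's control points into the test image amounts to applying the 3×3 homography in homogeneous coordinates. The sketch below assumes the homography `H` has already been estimated from the SIFT matches (in practice with a RANSAC-based estimator such as OpenCV's `cv2.findHomography`); the function names are hypothetical.

```python
def apply_homography(H, point):
    """Map a pixel (x, y) through a 3x3 homography H using homogeneous coordinates."""
    x, y = point
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    # divide by the homogeneous scale to return to pixel coordinates
    return (u / w, v / w)


def transfer_control_points(H, ref_points):
    """Locate the reference image's control points in the test image."""
    return [apply_homography(H, p) for p in ref_points]
```

The transferred pixel coordinates, together with the known 3D positions of the control points, are the inputs to the collinearity-equation step that recovers the smartphone position.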

A visual indoor positioning system based on CNN-based image retrieval was proposed in [71]. For each scene, the system database contains images together with their CNN features, their absolute coordinates and orientation quaternions with respect to a given local coordinate system, and scene labels. In the offline phase, the CNN features of the images of each scene were extracted using the pretrained VGG16 network. The system has two online phases: (1) CNN-based image retrieval and (2) pose estimation. During image retrieval, the two images most similar to the query image are retrieved. In the pose estimation phase, the “Oriented FAST and Rotated BRIEF (ORB)” [72] feature detector is used to extract features from three images (the test image and the two most similar retrieved images). The feature points of the test image are matched with those of each retrieved image using the Hamming distance. The scale of the monocular vision is calculated from the poses of the two retrieved images and the transformations obtained from the matches between the test image and each retrieved image. The position and orientation of the test image are then calculated from this monocular scale and the transformation between the test image and a retrieved image. Images from two benchmark datasets, the ICL-NUIM dataset [73] and the TUM RGB-D dataset [74], were used for evaluation. The average pose estimation error was 0.34 m and 3.43° on ICL-NUIM and 0.32 m and 5.58° on TUM RGB-D. On the ICL-NUIM dataset, the proposed system exhibited lower localization error than PoseNet [75], 4D PoseNet and an RGB-D camera pose estimation method that combines a CNN and an LSTM recurrent network [76].
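ORB descriptors are binary strings, so they are compared with the Hamming distance (the number of differing bits). A brute-force matcher can be sketched as follows; the `max_distance` threshold is an assumed value and descriptors are represented as Python `bytes` for illustration (real ORB descriptors are 32 bytes long).

```python
def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length binary descriptors."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def match_binary(query, train, max_distance=64):
    """Brute-force nearest neighbour by Hamming distance.

    Returns (query_index, train_index, distance) for matches within max_distance.
    """
    matches = []
    for qi, q in enumerate(query):
        ti, d = min(((ti, hamming(q, t)) for ti, t in enumerate(train)),
                    key=lambda pair: pair[1])
        if d <= max_distance:
            matches.append((qi, ti, d))
    return matches
```

XOR followed by a bit count is much cheaper than a floating-point distance, which is one reason binary descriptors such as ORB suit real-time positioning.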

PoseNet is a 6-DOF camera relocalization system for indoor and outdoor environments based on a deep learning network. It uses a 23-layer convolutional network derived from GoogLeNet [77], a network originally designed for classification. The Caffe library was used to implement the PoseNet model.

Many elderly people fall and become injured because of age-related factors. In this context, [78] proposed a smartphone-based floor detection module that identifies the floor in front of the user in both structured and unstructured environments. Structured environments are areas with a well-defined shape, and unstructured environments are areas of unknown shape. In unstructured environments, superpixel segmentation was used to estimate the floor location; it groups pixels into clusters whose boundaries adapt to the surrounding color. In structured environments, the Hough transform is used for line detection, and the floor-wall boundary is represented by a polygon of connected lines. The system achieved an accuracy of 87.6% in unstructured environments and 93% in structured environments.
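The line detection step can be illustrated with a minimal Hough accumulator over edge pixels, using the normal form ρ = x cos θ + y sin θ. The input edge points, the integer ρ resolution and the 1° θ resolution are assumptions for illustration; [78] does not state its parameters.

```python
import math


def hough_lines(points, theta_steps=180, top_k=1):
    """Vote in (rho, theta) space for edge points.

    rho = x*cos(theta) + y*sin(theta), rounded to whole pixels.
    Returns the top_k cells as (votes, rho, theta_degrees).
    """
    acc = {}
    for x, y in points:
        for t in range(theta_steps):
            theta = math.pi * t / theta_steps
            rho = round(x * math.cos(theta) + y * math.sin(theta))
            acc[(rho, t)] = acc.get((rho, t), 0) + 1
    best = sorted(acc.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    return [(votes, rho, 180.0 * t / theta_steps) for (rho, t), votes in best]
```

For 100 edge points on the horizontal line y = 10, the strongest cell is ρ = 10 at θ = 90°; segments found this way could then be joined into the floor-wall boundary polygon.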

Stairs, doors, and signs are common objects that can serve as reference points to guide people with visual impairments in indoor areas. Bashiri et al. [79] proposed an assistive system for this purpose. The system consists of two modules: a client mobile device that captures images and a processing server that detects objects in the images. A CNN model was used to recognize indoor objects such as stairs, doors, and signs. The model was built by transfer learning from the popular AlexNet model. The developed CNN model was evaluated on the MCindoor 20000 dataset [80] and achieved a recognition accuracy of more than 98%.

Jayakanth [81] examined the effectiveness of texture features and deep CNNs for indoor object recognition to assist people with visual impairments in indoor environments. The work evaluated three texture features (LPQ, LBP and BSIF) and a CNN model built by transfer learning from a pre-trained GoogLeNet model. All methods were evaluated on the MCindoor 20000 dataset. The CNN model built from the pre-trained GoogLeNet achieved a recognition accuracy of 100%. Although LPQ computation does not require the high-performance computing resources that CNNs need, the LPQ feature descriptor performed comparably to the CNN for indoor object recognition.

Afif et al. [82] extended RetinaNet, a well-known deep convolutional object detection network, to detect indoor objects and assist the navigation of people with visual impairments in indoor areas. The detection network comprises a backbone network and two sub-networks: the first performs object classification, and the second regresses the bounding boxes of the objects. A feature pyramid network is used as the backbone; this architecture can detect objects at various scales, which improves multi-scale prediction. The detector was evaluated on a custom dataset of 8000 images covering 16 indoor landmark object classes. Different backbone architectures, such as ResNet, DenseNet and VGGNet, were tested with RetinaNet. RetinaNet with a ResNet backbone outperformed all other combinations, achieving a mean average precision of 84.16%.

An object recognition method [83] for indoor robot navigation was developed using a SURF-based feature extractor and bag-of-words feature vectors classified by a Support Vector Machine (SVM). Feature vector matching was performed with the nearest neighbor algorithm or the RANSAC algorithm. The method was not able to recognize multiple objects in a single frame.

Table 2 presents a comparison of computer vision-based indoor positioning, indoor localization and indoor scene recognition systems.

Communication technology-based indoor positioning and wayfinding systems

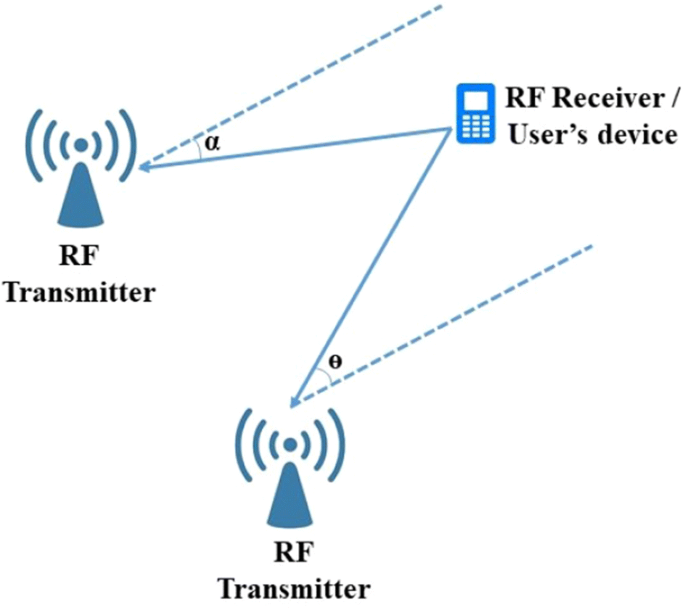

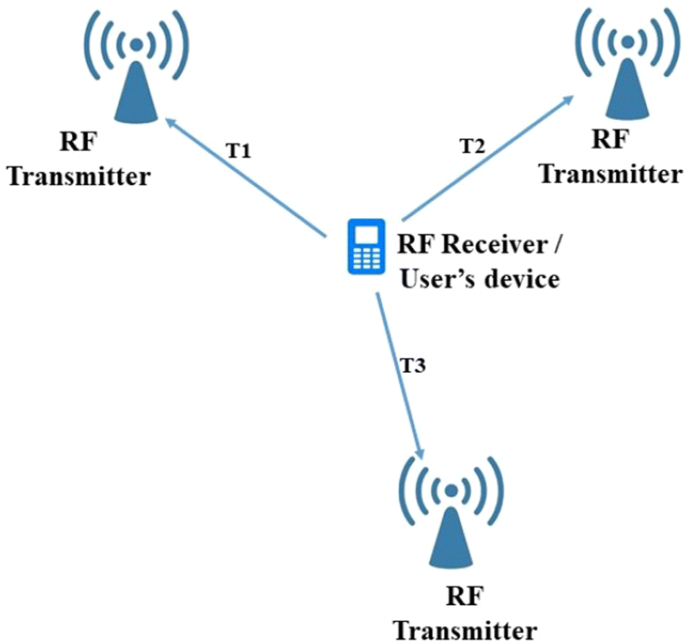

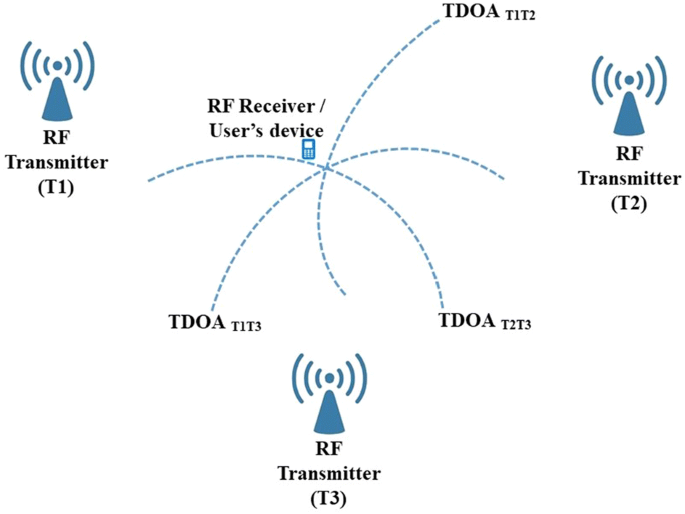

Communication technology-based positioning systems use various approaches to measure the signals from signal-transmitting devices (Wi-Fi access points, BLE beacons, etc.) installed in the indoor environment. The most common are time-based, angle-based, and RSS-based methods [84]. Time-based measurements include TOA and TDOA. The TOA approach uses the signal propagation time between the transmitter and the receiver to find the range of the user, while the TDOA approach uses differences in arrival times, either of one signal exchanged with several synchronized nodes or of two signals that travel at different velocities. The angle-based method, AOA, uses the angle at which the signal arrives at the target node to estimate the target direction. AOA measurement is rarely used indoors because of non-line-of-sight issues [85]. The AOA- and TOA-based indoor localization approaches are shown in Figs. 4 and 5, respectively.
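The TOA range computation is simply propagation speed times flight time. The sketch below shows one-way TOA (which assumes synchronized transmitter and receiver clocks) and a two-way variant that removes the responder's known reply delay; the function names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, propagation speed of RF signals in free space


def toa_range(t_transmit, t_arrive):
    """One-way TOA: distance from propagation time (requires synchronised clocks)."""
    return SPEED_OF_LIGHT * (t_arrive - t_transmit)


def two_way_range(t_round, t_reply):
    """Two-way ranging: half of the round-trip time after removing the responder's reply delay."""
    return SPEED_OF_LIGHT * (t_round - t_reply) / 2.0
```

Because RF signals travel about 0.3 m per nanosecond, a timing error of only 1 ns already causes roughly 30 cm of range error, which is why TOA systems need precise clocks.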

The TDOA method computes the difference between the TOAs of the signals from two distinct RF transmitters at the mobile device. Geometrically, a TDOA value places the device on a hyperbola whose foci are the two transmitters. When more than one TDOA value is available, the intersection point of the hyperbolas is taken as the position of the mobile device. Figure 6 illustrates the TDOA-based indoor localization approach.
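Finding the intersection of the hyperbolas can be sketched as a brute-force search for the point whose predicted range differences best match the measured ones. The grid search, the room size and the anchor layout are assumptions for illustration; practical systems typically use closed-form or iterative least-squares solvers instead.

```python
import math


def tdoa_residual(p, anchors, range_diffs):
    """Sum of squared errors between measured and predicted range differences.

    range_diffs[i] = c * (TOA at anchor i+1 - TOA at anchor 0), i.e. distances in metres.
    """
    d0 = math.dist(p, anchors[0])
    return sum((math.dist(p, a) - d0 - r) ** 2
               for a, r in zip(anchors[1:], range_diffs))


def tdoa_grid_search(anchors, range_diffs, width, height, step=0.1):
    """Return the grid point where the TDOA hyperbolas best intersect."""
    best, best_err = None, float("inf")
    for i in range(int(width / step) + 1):
        for j in range(int(height / step) + 1):
            p = (i * step, j * step)
            err = tdoa_residual(p, anchors, range_diffs)
            if err < best_err:
                best, best_err = p, err
    return best
```

With four transmitters at the corners of a 10 m × 10 m room and noise-free range differences generated for a device at (3, 4), the search returns (3, 4) to within the grid step.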

Lateration, angulation, proximity, and radio fingerprinting are the main techniques used in communication technology-based systems for position estimation. The lateration technique estimates the distances between the receiver and a set of transmitting devices (access points, tags or beacons) installed at predefined locations, and then computes the position from these distances. The angulation technique is similar to lateration but uses the angle or phase difference between the sender and receiver instead of distance [86].
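Lateration can be sketched in 2-D by subtracting the first circle equation from the others, which removes the quadratic terms and leaves a linear system 2(x_i − x_0)x + 2(y_i − y_0)y = d_0² − d_i² + x_i² − x_0² + y_i² − y_0², solved here by least squares. The anchor coordinates are hypothetical.

```python
def trilaterate(anchors, distances):
    """Linearised least-squares lateration in 2-D (needs >= 3 non-collinear anchors)."""
    (x0, y0), d0 = anchors[0], distances[0]
    rows, rhs = [], []
    for (xi, yi), di in zip(anchors[1:], distances[1:]):
        # circle_i minus circle_0 gives one linear equation in (x, y)
        rows.append((2 * (xi - x0), 2 * (yi - y0)))
        rhs.append(d0**2 - di**2 + xi**2 - x0**2 + yi**2 - y0**2)
    # normal equations (A^T A) p = A^T b for the two unknowns
    a11 = sum(r[0] * r[0] for r in rows)
    a12 = sum(r[0] * r[1] for r in rows)
    a22 = sum(r[1] * r[1] for r in rows)
    b1 = sum(r[0] * b for r, b in zip(rows, rhs))
    b2 = sum(r[1] * b for r, b in zip(rows, rhs))
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear")
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)
```

With anchors at (0, 0), (10, 0) and (0, 10) and exact distances 5, √65 and √45, the function returns (3, 4). With noisy distances and more than three anchors, the least-squares step averages out part of the error.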

The proximity technique estimates the position from the receiver's nearness to known locations. Compared with lateration and angulation, it provides only a rough location or a set of possible locations. Radio fingerprinting differs entirely from the other techniques: it does not consider the distance, angle or nearness between sender and receiver. Instead, a pattern matching procedure is applied, in which the RSS or other signal properties observed at a location are compared with the RSS values for different locations stored in a database [87]. The general steps of an RSS fingerprint-based localization system are shown in Fig. 7. For pattern matching, the literature uses various algorithms, including Euclidean distance and machine learning algorithms such as KNN and SVM.
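The online pattern-matching step can be sketched with weighted KNN on Euclidean RSS distance. The radio map layout, the AP names and the −100 dBm value used for APs that were not heard are assumptions for illustration.

```python
import math


def knn_locate(observed, radio_map, k=3, missing=-100.0):
    """Weighted K-NN fingerprint matching.

    observed: dict AP -> RSS (dBm) measured online.
    radio_map: list of ((x, y), dict AP -> RSS) collected offline.
    APs not heard in a scan are filled with the floor value `missing`.
    """
    aps = set(observed)
    for _, fp in radio_map:
        aps |= set(fp)

    def distance(fp):
        return math.sqrt(sum((observed.get(a, missing) - fp.get(a, missing)) ** 2
                             for a in aps))

    nearest = sorted(radio_map, key=lambda entry: distance(entry[1]))[:k]
    # closer fingerprints get larger weights; epsilon avoids division by zero
    weights = [1.0 / (distance(fp) + 1e-6) for _, fp in nearest]
    total = sum(weights)
    x = sum(w * pos[0] for w, (pos, _) in zip(weights, nearest)) / total
    y = sum(w * pos[1] for w, (pos, _) in zip(weights, nearest)) / total
    return x, y
```

Weighting by inverse distance lets a nearly exact fingerprint match dominate, while still interpolating between reference points when the observation lies between them.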

FreeNavi [88] is a mapless indoor navigation system that relies on the Wi-Fi fingerprints of the entrance of each landmark in the indoor environment. Along with the Wi-Fi fingerprints, users' walking traces between pairs of landmarks were used to create virtual maps of the indoor environment. A longest common subsequence (LCS) algorithm [89], which measures the similarity between Wi-Fi fingerprints, was adopted for both virtual map creation and indoor localization. The LCS algorithm was designed to tolerate access point (AP) changes in regions with a high concentration of APs. To provide reliable navigation, two route planning algorithms were introduced: one finds the shortest path between two landmarks, and the other finds the most frequently traveled route. Both were implemented using Floyd's shortest path algorithm. FreeNavi was evaluated in a shopping center in Beijing using fingerprints of 23 landmarks and a total of 1200 m of traces. The virtual maps reached a maximum accuracy of 91%, although the navigation had an error step rate of 11.9% because users had to guess the travel direction at junctions.
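One way to apply LCS to Wi-Fi fingerprints is to order the APs of each scan by signal strength and compare the orderings, so that the score depends on the relative ranking of APs rather than on raw RSS values. This representation, the `top_n` cut-off and the normalisation are assumptions; [88] and [89] may define the sequences differently.

```python
def lcs_length(a, b):
    """Classic dynamic-programming longest common subsequence length."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b):
            cur.append(prev[j] + 1 if x == y else max(prev[j + 1], cur[j]))
        prev = cur
    return prev[-1]


def fingerprint_similarity(rss_a, rss_b, top_n=5):
    """Compare two scans (dict AP -> RSS) via the LCS of their strongest-AP orderings."""
    order_a = sorted(rss_a, key=rss_a.get, reverse=True)[:top_n]
    order_b = sorted(rss_b, key=rss_b.get, reverse=True)[:top_n]
    return lcs_length(order_a, order_b) / max(len(order_a), len(order_b), 1)
```

Because an AP that appears or disappears only shortens the common subsequence by one, this kind of score degrades gracefully when APs change, which matches the tolerance property described above.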

A Wi-Fi fingerprinting-based navigation system was proposed in [90]. The system combines Wi-Fi fingerprinting with a radio path loss model to estimate locations. The position estimation algorithm was based on particle filter and K nearest neighbor (K-NN) algorithms, and Dijkstra’s algorithm was implemented to compute the shortest path between the source and the destination. The authors also examined the performance of three fingerprint matching algorithms: the Kalman filter, the unscented Kalman filter and K-NN. The average errors of the three algorithms were similar, at approximately 1.6 m; however, K-NN had the largest maximum error.

In Wi-Fi-based indoor navigation systems, fluctuations in RSS can degrade positioning accuracy. To overcome this issue, a fingerprint spatial gradient (FSG) was introduced in [91]. The method exploits the spatial relationship among the RSS fingerprints of multiple nearby locations. To profile the FSG, the authors introduced an algorithm that selects a group of nearby fingerprints that improves both spatial stability and fingerprint similarity. A pattern matching approach compares the stored FSG with the queried FSG using similarity measures such as the cumulative angle function, cosine similarity or the discrete Fréchet distance. The average position estimation accuracy was between 3 and 4 m.
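Two of the similarity measures mentioned above can be sketched directly. Treating an FSG as a sequence of 2-D gradient vectors is a hypothetical representation chosen for illustration; the discrete Fréchet distance below uses the standard dynamic-programming recursion.

```python
import math
from functools import lru_cache


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def discrete_frechet(p, q):
    """Discrete Fréchet distance between two sequences of points."""
    @lru_cache(maxsize=None)
    def c(i, j):
        d = math.dist(p[i], q[j])
        if i == 0 and j == 0:
            return d
        if i == 0:
            return max(c(0, j - 1), d)
        if j == 0:
            return max(c(i - 1, 0), d)
        # best of the three ways to reach (i, j), but never below the current gap
        return max(min(c(i - 1, j), c(i - 1, j - 1), c(i, j - 1)), d)

    return c(len(p) - 1, len(q) - 1)
```

Unlike a point-by-point distance, the Fréchet distance respects the order of the sequence, so two gradient profiles with the same shape but a small offset still score as close.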

In Wi-Fi-based indoor positioning and navigation systems, the radio fingerprinting approach has been widely used to estimate the position of the RF signal receiver. Fingerprinting follows a pattern matching technique in which the properties of the currently received signals are compared with the signal properties stored during the offline (training) phase. Over the last 10 years, various machine learning algorithms, such as SVM [92], KNN [93] and neural networks [92], have been used for pattern matching in radio fingerprint-based indoor localization. Compared with traditional machine learning algorithms, deep learning algorithms such as CNNs and RNNs have proven effective in tasks such as image classification, text recognition and intrusion detection. Accordingly, deep neural network-based approaches [94, 95] have recently been applied in fingerprint-based indoor localization systems.

Jin-Woo et al. [96] proposed an indoor localization system that uses a CNN for Wi-Fi fingerprinting. Because RSS fluctuations and multipath effects cause errors in location estimation, models trained on little data can be ineffective. The proposed method uses 2-D radio maps, created from the 1-D signals, as inputs for training the CNN. The deep CNN architecture consists of four convolutional layers, two max-pooling layers, and two fully connected layers. Although it is a lightweight deep CNN, it outperformed all previously proposed deep neural network-based methods and achieved a mean accuracy of 95.41%. Because the CNN is trained on 2-D radio maps, it can learn both the signal strengths and the topology of the radio maps, which makes the system robust to small RSS fluctuations.
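The text does not describe exactly how the 1-D signals are turned into 2-D radio maps. A minimal sketch of one common choice, assuming each scan is a vector with one RSS entry per AP, is to clip and normalise the values and zero-pad the vector into the smallest square grid; the dBm range and the function name are hypothetical.

```python
import math


def rss_to_image(rss, floor_dbm=-110.0, ceil_dbm=-30.0):
    """Reshape a 1-D RSS vector (dBm, one entry per AP) into a square grid in [0, 1].

    None marks an AP that was not heard and is mapped to floor_dbm.
    The vector is zero-padded to the next perfect square.
    """
    side = math.ceil(math.sqrt(len(rss)))
    scaled = []
    for v in rss:
        v = floor_dbm if v is None else v
        v = min(max(v, floor_dbm), ceil_dbm)  # clip to the assumed dBm range
        scaled.append((v - floor_dbm) / (ceil_dbm - floor_dbm))
    scaled += [0.0] * (side * side - len(scaled))
    return [scaled[r * side:(r + 1) * side] for r in range(side)]
```

A fixed AP-to-pixel assignment is what lets the convolutional layers pick up patterns across neighbouring entries, so the same ordering must be used for training and online queries.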

Mittal et al. [97] adapted CNNs for a Wi-Fi-based indoor localization system for mobile devices. The work presents a novel technique for transforming Wi-Fi signatures into images and a CNN framework called CNN-LOC. Instead of using an existing dataset, the authors constructed their own database by collecting RSSI data in a test environment. One of the novelties of the work is the conversion of RSSI data into image data: for each location, the collected RSSI data are converted into a grayscale image using the Hadamard product method. Similar to [14], this work uses a lightweight deep CNN model with five CNN layers. To improve scalability, CNN-LOC is integrated with a hierarchical classifier, which scales a small CNN architecture up to larger problems. The hierarchical classifier has three layers: the first finds the floor number, the second detects the corridor, and the third estimates the location of the mobile device. The system was tested on three indoor paths extending over 90 m, and the average localization error was less than 2 m.

Ibrahim et al. [98] proposed an approach that improves localization accuracy by reducing the randomness and noise in RSS values. Time series of RSS values are fed to a CNN as input, and a hierarchical CNN architecture predicts the fine-grained location of the user: the first layer detects the building, and the second and third layers predict the floor number and the location of the user, respectively. The model was evaluated on the UJIIndoorLoc dataset, which consists of Wi-Fi RSS fingerprints collected in several multistory buildings. The hierarchical CNN predicted the building and floor with an accuracy of 100%. The average localization error was 2.77 m, which is acceptable for Wi-Fi-based systems.

Li et al. [99] proposed a multi-model framework for indoor localization in mobile edge computing environments. The work examines multi-model localization approaches and their drawbacks, and proposes theoretical solutions to overcome these shortcomings. Many machine learning models exist for RSS-based indoor localization; although they perform well in test environments, they often fail to achieve the same performance in practical situations. Many factors in indoor areas, such as refrigerators, temperature and doors, can affect localization performance. Theoretically, building distinct models for distinct surroundings is an effective approach to indoor localization. However, multi-model approaches have their own major drawbacks: too many models must be built, and unstable factors that affect RSS remain. To solve these issues, two combinatorial optimization problems are formulated: an external feature selection problem and a model selection problem for location estimation. The NP-hardness of both problems is analyzed in this work.

Wireless technology-based indoor localization systems are prone to errors because of non-line-of-sight issues, inconsistency in received signals, RSS fluctuations, etc. In large-scale wireless localization systems, the information is sparse relative to the number of sensors, so the main challenge is recovering the sparse signals for further processing to localize the user. Compressive sensing is a popular signal processing technique for efficiently acquiring and reconstructing such signals, and it has been used in wireless indoor positioning systems [100, 101] to recover sparse signals efficiently. Many existing compressive sensing techniques are designed for a single application and lack dynamic adaptability. Zhang et al. [102] proposed a learning-based joint compressive sensing technique to address these challenges. Their technique learns the basis of the sparse transformation from the compressive sensing measurements instead of from historical data, because acquiring a large amount of historical data is costly and learning from specific historical data can limit dynamic adaptability.
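To make "recovering sparse signals" concrete, the sketch below implements Orthogonal Matching Pursuit, a standard greedy compressive sensing recovery algorithm. It is a generic illustration with a hypothetical measurement matrix `Phi`, not the learning-based technique of [102], which additionally learns the sparsifying basis.

```python
def _solve(A, b):
    """Solve a small square linear system by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def omp(Phi, y, sparsity):
    """Orthogonal Matching Pursuit: recover a `sparsity`-sparse x with y = Phi x.

    Phi is a list of m rows, each of length n; returns x as a list of length n.
    """
    m, n = len(Phi), len(Phi[0])
    cols = [[Phi[i][j] for i in range(m)] for j in range(n)]
    support, residual, coef = [], list(y), []
    for _ in range(sparsity):
        # pick the column most correlated with the current residual
        j = max((j for j in range(n) if j not in support),
                key=lambda j: abs(_dot(cols[j], residual)))
        support.append(j)
        # least-squares fit on the selected columns via the normal equations
        G = [[_dot(cols[a], cols[b]) for b in support] for a in support]
        coef = _solve(G, [_dot(cols[a], y) for a in support])
        residual = [y[i] - sum(c * cols[s][i] for c, s in zip(coef, support))
                    for i in range(m)]
    x = [0.0] * n
    for c, s in zip(coef, support):
        x[s] = c
    return x
```

For example, with three measurements of a four-entry signal that has only two nonzero entries, `omp` recovers both entries exactly from the under-determined system.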

A hybrid navigation system that combines magnetic matching (MM), PDR and Wi-Fi fingerprinting was proposed in [103]. Because the system combines different approaches, the user can navigate even through regions where Wi-Fi signals are poor or where magnetic features are indistinct. The user's location was determined by finding the candidate with the smallest mean absolute difference between the measured fingerprint or magnetic profile and the stored values of that candidate in the database. An attitude determination technique [104] and a PDR method [105] were integrated to implement the navigation algorithm. To improve the Wi-Fi and MM results, three levels of quality control based on a PDR-based Kalman filter were introduced. The method achieved an accuracy of 3.2 m in regions with a sufficient number of APs and 3.8 m in regions with few APs.
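The candidate selection rule (smallest mean absolute difference) can be sketched in a few lines. The candidate identifiers and the magnetic profile values (in µT) are hypothetical.

```python
def mad(a, b):
    """Mean absolute difference between two equal-length profiles."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def best_candidate(observed, candidates):
    """Return the candidate id whose stored profile has the least mean absolute difference."""
    return min(candidates, key=lambda cid: mad(observed, candidates[cid]))
```

For an observed magnetic profile `[48.0, 50.0, 52.0]`, the candidate `"B": [47, 50, 53]` (MAD ≈ 0.67) is chosen over `"A": [40, 41, 42]` and `"C": [60, 61, 62]`.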

iBill [106] integrates iBeacon devices, inertial sensors, and a magnetometer to localize users in large indoor areas using a smartphone. iBeacon is a BLE-based protocol developed by Apple Inc. [107]. The system has two operational modes. If the user is within range of the beacons, an RSS-based trilateration algorithm is used for localization. Otherwise, the system switches to particle filter localization (PFL) mode, which uses magnetic fields and inertial sensor data. Because the PFL mode cannot compute the initial position of the user by itself, it takes the last location obtained in iBeacon localization mode as the initial position. Accelerometer data and gyroscope data are used to update the location and direction of the particles, respectively; the particles represent the walking distance and direction of the user. To overcome the limitation of weighting particles by magnetic fields alone, the system also uses the probability distributions of the particles' step lengths and turning angles to determine their weights. iBill reduced the computational overhead of PFL and solved the problems associated with an unknown initial location and heavy shaking of the smartphone. It achieved lower localization error than the dead reckoning approach and Magicol [108]. Magicol combines magnetic fields and Wi-Fi signals using a “two-pass bidirectional particle filter” for localization, and it consumes less power than systems that rely only on Wi-Fi signals. In the Magicol and dead reckoning approaches, the localization error increased drastically as the number of steps grew, whereas iBill maintained consistent localization accuracy even during long walks.
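One predict, weight and resample cycle of a PDR particle filter can be sketched as follows. The noise levels, the Gaussian magnetic likelihood and the `mag_map` lookup are hypothetical; iBill's actual weighting also uses step-length and turning-angle distributions, which are not reproduced here.

```python
import math
import random


def gaussian(x, mu, sigma):
    """Unnormalised Gaussian likelihood."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2)


def pf_step(particles, step_len, turn, mag_measured, mag_map,
            sigma_len=0.1, sigma_turn=0.05, sigma_mag=2.0, rng=random):
    """One cycle for particles [(x, y, heading), ...] after a detected step.

    mag_map(x, y) -> expected magnetic magnitude (µT) at that position (hypothetical lookup).
    """
    moved, weights = [], []
    for x, y, h in particles:
        h2 = h + rng.gauss(turn, sigma_turn)                # gyroscope-driven heading update
        length = max(0.0, rng.gauss(step_len, sigma_len))  # accelerometer-derived step length
        x2, y2 = x + length * math.cos(h2), y + length * math.sin(h2)
        moved.append((x2, y2, h2))
        # weight each particle by how well the map explains the magnetometer reading
        weights.append(gaussian(mag_measured, mag_map(x2, y2), sigma_mag))
    if sum(weights) == 0:
        weights = [1.0] * len(moved)  # degenerate case: fall back to uniform weights
    # resample with replacement in proportion to the weights
    return rng.choices(moved, weights=weights, k=len(moved))
```

Starting all particles at the last iBeacon fix, as the text describes, corresponds to initialising `particles` with copies of that position before calling `pf_step` at every detected step.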

Lee et al. [109] proposed an indoor localization system that uses built-in smartphone sensors, such as Bluetooth receivers, accelerometers, and barometers. The RSSIs of the signals received from Bluetooth beacons are used to estimate the location with a trilateration algorithm. PDR was used to reduce the uncertainty in the RSSI measurements, improving location estimation by tracking the heading and steps of a typical user. Atmospheric pressure measured by the barometer was used to estimate the vertical location. Because of the limitations of smartphone sensors, the method could not deliver satisfactory results in real-world scenarios.
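Vertical location estimation from a barometer is often based on the international barometric formula h = 44330 (1 − (p / p₀)^(1/5.255)). The sketch below measures height relative to a ground-floor pressure reading; the 3 m floor height is an assumption, and [109] does not state which formula it uses.

```python
def pressure_to_altitude(p_hpa, p0_hpa=1013.25):
    """International barometric formula: height (m) above the level where pressure is p0."""
    return 44330.0 * (1.0 - (p_hpa / p0_hpa) ** (1.0 / 5.255))


def estimate_floor(p_hpa, p_ground_hpa, floor_height_m=3.0):
    """Floor index relative to a ground-floor pressure reading (floor height is an assumption)."""
    dh = pressure_to_altitude(p_hpa, p_ground_hpa)
    return round(dh / floor_height_m)
```

Near sea level, pressure drops by roughly 0.12 hPa per metre, so a drop of about 0.72 hPa relative to the ground-floor reading corresponds to about 6 m, i.e. two floors up under the 3 m assumption.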

A simple but efficient Bluetooth beacon-based navigation system using smartphones was proposed in [110]. The system uses RSSI measurements for position estimation. The positioning algorithm [111] first measures the RSSI from each beacon and removes noise. The “Log-Path Loss model” [112], applied to the mean of the RSSI values, is used to estimate the distance to each beacon. When the user is near two or more beacons, the algorithm uses a proportional division method: the line representing the corridor on which the beacons are installed is divided according to the distances to the two nearby beacons. When only one beacon is near the user and the other is far away, the algorithm assumes that the user is on the other side of the nearby beacon. Dijkstra’s shortest path algorithm was adopted to find the shortest navigation route. The system performed well in a small indoor region.
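The distance estimate follows the log-distance path loss model RSSI(d) = RSSI(1 m) − 10 n log₁₀ d, inverted to d = 10^((RSSI(1 m) − RSSI) / (10 n)). The reference RSSI of −59 dBm at 1 m and the path loss exponent n = 2 are assumed values, and the proportional split below is a hypothetical reading of the "proportional division" step.

```python
def rssi_to_distance(rssi_samples, rssi_at_1m=-59.0, n=2.0):
    """Log-distance path loss model applied to the mean of the RSSI samples (dBm)."""
    mean_rssi = sum(rssi_samples) / len(rssi_samples)
    return 10 ** ((rssi_at_1m - mean_rssi) / (10.0 * n))


def proportional_position(pos_a, pos_b, dist_a, dist_b):
    """Place the user on the corridor between two beacons (1-D positions in metres),
    splitting the segment in the ratio of the estimated distances."""
    t = dist_a / (dist_a + dist_b)
    return pos_a + t * (pos_b - pos_a)
```

With these parameters, a mean RSSI of −79 dBm maps to 10 m, and a user estimated 2 m from a beacon at 0 m and 8 m from a beacon at 10 m is placed at 2 m along the corridor.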

DRBM [113] is a dead reckoning algorithm that combines a “Bluetooth propagation model” with multiple sensors to improve localization accuracy. The “Bluetooth propagation model” uses linear regression for feature extraction. A user-specific parameter that varies with the characteristics of each user was combined with accelerometer data to calculate the steps covered by the user. The outputs of the Bluetooth propagation model and the sensor-based step calculation were then fused with a Kalman filter to improve positioning accuracy. The positioning errors were within 0.8 m.
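The fusion step can be illustrated with a one-dimensional Kalman filter in which PDR step displacements drive the prediction and Bluetooth-derived positions drive the correction. The 1-D state (position along a corridor) and the noise variances `q` and `r` are simplifying assumptions; DRBM's actual state model is not given in the text.

```python
def kalman_1d(x0, p0, steps, measurements, q=0.05, r=1.0):
    """Fuse PDR displacements (prediction) with Bluetooth positions (update).

    x0, p0: initial position estimate and its variance.
    steps: displacement per epoch from step counting; measurements: position or None.
    q, r: process and measurement noise variances.
    Returns (list of fused positions, final variance).
    """
    x, p = x0, p0
    track = []
    for dx, z in zip(steps, measurements):
        # predict: move by the PDR step, uncertainty grows
        x, p = x + dx, p + q
        # update: blend in the Bluetooth estimate according to the Kalman gain
        if z is not None:
            k = p / (p + r)
            x, p = x + k * (z - x), (1 - k) * p
        track.append(x)
    return track, p
```

When both sources are equally uncertain (p = r), the gain is 0.5 and the fused position lies halfway between the PDR prediction and the Bluetooth estimate; epochs without a Bluetooth reading fall back to pure dead reckoning.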

Reference [114] examined the performance of machine learning classifiers, such as SVM, Random Forest and a Bayes classifier, for Bluetooth low-energy beacon fingerprinting. The experimental infrastructure used beacons from Estimote and iBeeks, both of which support the Eddystone profiles developed by Google. The authors evaluated the algorithms on different smartphones running a preinstalled fingerprinting Android app. Eddystone packets from each beacon are scanned over a period of time to obtain the RSSI values, and the MAC address of each beacon and the associated RSSI values are logged for training. The open-source project ‘Find’, whose servers already provide several machine learning algorithms, was adopted for the whole task. Random Forest improved positioning accuracy by 30% compared with the Bayes classifier and identified the correct location in 91% of cases.

In recent years, BLE beacon-based technology has been used to develop assistive navigation systems for people with visual impairments. Blind or visually impaired users with minimal knowledge of smartphones can use these systems to find their way in train stations, museums, university premises, etc. Basem et al. [115] proposed a BLE beacon-based indoor navigation system for people with visual impairments that uses a fuzzy logic framework to estimate the user's position in indoor areas. The underlying positioning method is BLE fingerprinting. The authors analyzed the performance of several versions of the fingerprinting algorithm, including type-1 fuzzy logic, fuzzy KNN and type-2 fuzzy logic, as well as traditional methods such as proximity, trilateration and centroid. The type-2 fuzzy logic method outperformed all other methods, with an average localization error of just 0.43 m.

Murata et al. [116] proposed a smartphone-based indoor localization system that can be extended for blind navigation in large indoor environments such as multistory buildings. The work addresses six key challenges for smartphone-based indoor localization in large and complex environments, associated with the mobility of the user and the nature of large-scale environments. These include accurate and continuous localization, scaling the system to multiple floors, differing RSS values from the same transmitter on different devices at the same location, varied walking patterns of individuals, and signal delay. The authors improved a probabilistic localization algorithm with various techniques to address these challenges. RSSI from BLE beacons and data from the smartphone's embedded IMUs are used for location estimation. The system was evaluated in a large shopping mall (21,000 m² area) with 10 individuals, including blind people and people with low vision. The proposed techniques reduced the mean localization error of the probabilistic localization algorithm from 3 m to 1.5 m.

Ahmetovic et al. [117] proposed a smartphone-based indoor navigation system for people with visual impairments. The system, NavCog, relies on RSSI from BLE beacons and the smartphone’s built-in sensors to localize the user indoors, estimating the location with a fingerprint matching algorithm. Of the many fingerprint matching algorithms proposed in the literature, the authors chose a variant of the KNN algorithm, which computes the user’s location by matching the observed RSSI values against RSSI fingerprints stored during the offline stage. Beyond basic localization and navigation, NavCog can notify users about their surroundings, such as points of interest, stairs or elevators. NavCog was evaluated on a university campus with six people with visual impairments. The experiments were video-recorded to check whether a user missed a turn, waited for instructions or hit an obstacle during navigation. The current version of NavCog cannot notify users when they are traveling in the wrong direction.
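The online stage of KNN fingerprinting can be sketched as follows: compute the distance between the observed RSSI vector and each stored fingerprint, then average the positions of the k closest ones. This is plain KNN, not NavCog’s specific variant; the constant `MISSING_RSSI` used for beacons absent from a scan and the sample fingerprints are hypothetical.

```python
import math

MISSING_RSSI = -100.0  # hypothetical floor value for beacons not heard in a scan


def rssi_distance(scan_a, scan_b):
    """Euclidean distance between two RSSI vectors keyed by beacon ID."""
    beacon_ids = set(scan_a) | set(scan_b)
    return math.sqrt(sum(
        (scan_a.get(b, MISSING_RSSI) - scan_b.get(b, MISSING_RSSI)) ** 2
        for b in beacon_ids))


def knn_locate(observed, fingerprints, k=3):
    """Average the positions of the k fingerprints closest in RSSI space.

    fingerprints: list of ((x, y), {beacon_id: rssi}) recorded offline.
    """
    nearest = sorted(fingerprints, key=lambda fp: rssi_distance(observed, fp[1]))[:k]
    xs = [pos[0] for pos, _ in nearest]
    ys = [pos[1] for pos, _ in nearest]
    return sum(xs) / len(xs), sum(ys) / len(ys)


fingerprints = [
    ((0.0, 0.0), {"b1": -55, "b2": -75}),
    ((2.0, 0.0), {"b1": -62, "b2": -68}),
    ((4.0, 0.0), {"b1": -70, "b2": -58}),
]
print(knn_locate({"b1": -60, "b2": -70}, fingerprints, k=2))
```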

Kim et al. [118] proposed a smartphone-based indoor navigation assistant for people with visual impairments. The system, StaNavi, uses a smartphone and BLE beacons installed indoors to guide users in a large train station. Along with the RSS from the BLE beacons, data from the smartphone’s built-in compass are used to estimate the position and orientation of visually impaired users. The user’s position was computed with proximity detection, a commonly used indoor localization technique, and StaNavi relies on a cloud-based server to provide navigation route information. Similar to StaNavi, the GuideBeacon [119] indoor navigation system uses the smartphone compass and BLE beacons to estimate the position and orientation of visually impaired users indoors; GuideBeacon, however, relies on low-cost BLE beacons for indoor tracking. Its position estimation identifies the beacons nearest to the user with the proximity detection technique, and it can provide audio, haptic and tactile feedback to the visually impaired user. Reference [120] proposed an indoor navigation system for people with visual and hearing impairments, whose localization algorithm combined proximity detection with nearness-to-beacon techniques to track the user. BLE beacon-based navigation systems have become popular only in the last 5 to 6 years, possibly owing to the availability of low-cost smartphones and to beacons being cheaper than the RF transmitters used previously. For blind navigation, only a few BLE beacon-based systems have been proposed in recent years.
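Proximity detection, as used by StaNavi and GuideBeacon, reduces to "which beacon is closest right now?". The sketch below smooths each beacon’s RSSI with a short moving average and reports the strongest beacon above a cut-off. The class name, the window size and the -85 dBm threshold are hypothetical choices, not values from [118] or [119].

```python
from collections import defaultdict, deque


class ProximityDetector:
    """Nearest-beacon (proximity) detection with per-beacon RSSI smoothing.

    window and min_rssi are hypothetical tuning parameters.
    """

    def __init__(self, window=5, min_rssi=-85.0):
        self.min_rssi = min_rssi
        # Keep only the most recent `window` readings for each beacon.
        self.history = defaultdict(lambda: deque(maxlen=window))

    def add_reading(self, beacon_id, rssi_dbm):
        self.history[beacon_id].append(rssi_dbm)

    def nearest_beacon(self):
        """Return the beacon with the strongest average RSSI above min_rssi, or None."""
        best_id, best_avg = None, self.min_rssi
        for beacon_id, readings in self.history.items():
            avg = sum(readings) / len(readings)
            if avg > best_avg:
                best_id, best_avg = beacon_id, avg
        return best_id


detector = ProximityDetector()
for rssi in (-72, -70, -71):
    detector.add_reading("platform-3", rssi)
for rssi in (-64, -66, -65):
    detector.add_reading("ticket-gate", rssi)
detector.add_reading("exit-b", -95)  # too weak to count
print(detector.nearest_beacon())
```

The moving average trades a little latency for stability: a single noisy reading cannot flip the reported landmark, which matters when each change triggers a spoken instruction.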

ISAB [121] is a wayfinding system developed to assist people with VI in libraries; it uses several technologies, namely Wi-Fi, Bluetooth and RFID, each for a different purpose. First, Wi-Fi fingerprinting was used for localization and for navigating from the building entrance to the desired floor. Floor plans of the indoor environment were represented as graphs, and Dijkstra’s algorithm was implemented for path planning. Bluetooth was then used to navigate users to the shelf holding the desired item: each shelf has a shelf reader with an attached Bluetooth module, and once users pair their smartphones with this module, the shelf reader provides instructions. Finally, RFID was used to find the desired item on the shelf, with each item carrying an embedded RFID tag. In addition, an effective user interface was developed to simplify blind users’ interactions with the system. The system helped users reach a target with a maximum accuracy of 10 cm.
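Dijkstra’s algorithm recurs throughout this section (ISAB, PERCEPT II, [124], DOVI, AVII). A minimal sketch over a floor plan encoded as a weighted graph is shown below; the node names and corridor lengths are hypothetical, and real systems would attach accessibility attributes (stairs versus elevator, for example) to the edges.

```python
import heapq
import math


def add_corridor(graph, a, b, length_m):
    """Add an undirected edge between two floor-plan nodes."""
    graph.setdefault(a, []).append((b, length_m))
    graph.setdefault(b, []).append((a, length_m))


def dijkstra(graph, source, target):
    """Return (path, cost) of the shortest route, or (None, inf) if unreachable."""
    dist = {source: 0.0}
    previous = {}
    visited = set()
    heap = [(0.0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale heap entry
        visited.add(node)
        if node == target:
            break
        for neighbour, weight in graph.get(node, []):
            candidate = d + weight
            if candidate < dist.get(neighbour, math.inf):
                dist[neighbour] = candidate
                previous[neighbour] = node
                heapq.heappush(heap, (candidate, neighbour))
    if target not in dist:
        return None, math.inf
    path = [target]
    while path[-1] != source:
        path.append(previous[path[-1]])
    return path[::-1], dist[target]


# Hypothetical library floor plan (edge weights in metres).
floor_plan = {}
add_corridor(floor_plan, "entrance", "lobby", 5.0)
add_corridor(floor_plan, "lobby", "stairs", 3.0)
add_corridor(floor_plan, "stairs", "floor2-landing", 4.0)
add_corridor(floor_plan, "entrance", "elevator", 10.0)
add_corridor(floor_plan, "elevator", "floor2-landing", 1.0)
print(dijkstra(floor_plan, "entrance", "floor2-landing"))
```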

PERCEPT [122] is an RFID-based navigation system developed for people with VI. PERCEPT consists of passive RFID tags affixed in indoor areas, a “glove” containing an RFID reader, and kiosks placed at the entrances and exits of landmarks. The kiosks contain information about key destinations and landmarks. The system also includes an Android smartphone that provides instructions to the user through a text-to-speech engine; the phone communicates with the glove and the PERCEPT server using Wi-Fi and Bluetooth. The directions provided by PERCEPT lack proximity information, and they were not expressed in terms of steps or feet.

PERCEPT II [123] introduced a low-cost navigation method that uses smartphones alone (the glove was omitted). Deployment cost was reduced by creating a survey tool for orientation and mobility that aids in labeling landmarks. NFC tags were also deployed at specific landmarks to provide audio navigational instructions. The navigation module implemented Dijkstra’s algorithm for route generation.

An RFID-based indoor wayfinding system for people with VI and elderly people was proposed in [124]. The system consists of a wearable device and a server. The wearable device contains an RFID reader that reads passive tags, an ultrasonic range finder for detecting obstacles in the path and a voice controller. The server comprises a localization module and a navigation module, the latter implementing Dijkstra’s algorithm for path planning. For efficient localization, the authors took into account the normal movements of a sighted person when developing the system. The navigation module was linked to an obstacle avoidance algorithm in which obstacles are categorized as expected or unexpected by assigning a predefined probability measure. These obstacles were further categorized as mobile or fixed, and a triangle set was formulated for detecting mobile obstacles. An earphone was also embedded in the system to provide guidance to the user.

Roll Caller [125] introduced a method that relates the location of the user to that of the targeted object based on frequency shifts observed in the RFID system. The Roll Caller prototype comprises passive RFID tags attached to items, an RFID reader with multiple antennas, and a smartphone with inertial sensors such as accelerometers and magnetometers. An anchor timestamp was used to represent the frequency shift, and it was integrated with inertial measurements from the smartphone, such as acceleration and direction, to allocate antennas. The method reduced system overhead because the locations of the person and the item are not calculated separately; instead, a spatial relationship between the object and the user is used to locate them.

DOVI [126] combined IMU and RFID technology to assist people with VI in indoor areas. DOVI’s navigation unit contains a chip (NavChipISNC01 from InterSense Inc.) with a three-axis accelerometer, barometer and magnetometer. An extended Kalman filter was included to compensate for sensor and gravity biases, while the RFID module was used to reduce drift errors in the IMU. Dijkstra’s algorithm was used to compute the shortest navigation routes. DOVI also has a haptic navigation unit that provides navigation feedback and instructions to the user through vibration.
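The idea of using RFID reads to bound IMU drift can be illustrated with a one-dimensional Kalman filter: dead-reckoned displacements grow the position variance, and reading a tag with a surveyed position shrinks it again. This is a deliberately simplified linear sketch, not DOVI’s extended Kalman filter; the class name and the noise variances `q` and `r` are hypothetical.

```python
class DriftCorrectingFilter:
    """1-D Kalman filter: IMU displacement in predict, RFID tag fix in update.

    q and r are hypothetical process and measurement noise variances (m^2).
    """

    def __init__(self, position=0.0, variance=0.0):
        self.x = position
        self.p = variance

    def predict(self, displacement, q):
        # Dead-reckoned motion; uncertainty grows every step (drift).
        self.x += displacement
        self.p += q

    def update(self, tag_position, r):
        # An RFID tag with a surveyed position was read: blend it in.
        gain = self.p / (self.p + r)
        self.x += gain * (tag_position - self.x)
        self.p *= 1.0 - gain
        return gain


f = DriftCorrectingFilter()
for _ in range(10):
    f.predict(1.1, q=0.01)  # biased IMU: true step is 1.0 m
print("before tag:", f.x, f.p)
f.update(10.0, r=0.01)      # tag surveyed at 10 m
print("after tag:", f.x, f.p)
```

The gain is large here because ten steps of accumulated drift make the IMU estimate far less certain than a single tag read, so the filter trusts the tag.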

Traditional RFID positioning algorithms suffer from fluctuating location estimates due to multipath and environmental interference. To address this issue, a new positioning algorithm called BKNN was introduced in [127]. BKNN combines Bayesian probability with the K-NN algorithm. In the implemented UHF-RFID system, RSS values were modeled with a Gaussian probability distribution for localization, and irregular RSS values were removed with Gaussian filters. Integrating Bayesian estimation with K-NN improved the localization accuracy, and the average location estimation error of the proposed system was approximately 15 cm.
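The two ingredients of BKNN can be sketched separately. `gaussian_filter` discards RSS samples far from the mean before averaging (the mean ± k·σ rule with k = 1 is an assumed choice), and `bayes_knn` ranks reference points by a Gaussian likelihood of the observation under a uniform prior, then averages the top k weighted by posterior. This is one plausible reading of "Bayesian probability combined with K-NN", not the exact formulation of [127].

```python
import math
import statistics


def gaussian_filter(samples, k=1.0):
    """Average only the RSS samples within mean ± k·std (k is an assumed parameter)."""
    mu = statistics.mean(samples)
    sigma = statistics.pstdev(samples)
    kept = [s for s in samples if abs(s - mu) <= k * sigma] or list(samples)
    return sum(kept) / len(kept)


def bayes_knn(observed, fingerprints, k=3):
    """Posterior-weighted KNN over fingerprints.

    fingerprints: list of ((x, y), {tag_id: (mean_rss, std_rss)})
    Each reference point's likelihood is a product of per-tag Gaussians;
    with a uniform prior the posterior is proportional to it.
    """
    scored = []
    for pos, model in fingerprints:
        log_like = 0.0
        for tag_id, rss in observed.items():
            mean, std = model[tag_id]
            log_like += -((rss - mean) ** 2) / (2 * std ** 2) - math.log(std)
        scored.append((log_like, pos))
    scored.sort(key=lambda item: item[0], reverse=True)
    top = scored[:k]
    peak = top[0][0]
    weights = [math.exp(ll - peak) for ll, _ in top]
    total = sum(weights)
    x = sum(w * pos[0] for w, (_, pos) in zip(weights, top)) / total
    y = sum(w * pos[1] for w, (_, pos) in zip(weights, top)) / total
    return x, y


raw = [-60, -61, -59, -60, -90]  # one multipath outlier
fingerprints = [
    ((0.0, 0.0), {"t1": (-60, 3), "t2": (-70, 3)}),
    ((2.0, 0.0), {"t1": (-70, 3), "t2": (-60, 3)}),
    ((0.0, 2.0), {"t1": (-65, 3), "t2": (-65, 3)}),
]
observed = {"t1": gaussian_filter(raw), "t2": -70.0}
print(observed, bayes_knn(observed, fingerprints, k=3))
```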

A VLC-based navigation system that uses the existing LEDs inside an indoor environment was proposed in [128]. The system comprises four LED bulbs attached to the ceiling of the room, interconnected on the same circuit so that they operate as a single optical transmitter. A trilateration algorithm was implemented to locate the receiver (user), and the target’s path was tracked with Kalman filtering and sequential importance sampling particle filtering. Comparing the two trackers, the authors found that the particle filter performs better than the Kalman filter for user tracking.
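Trilateration from ceiling LEDs can be sketched as a linear least-squares problem. Writing each range as $(x-x_i)^2+(y-y_i)^2=d_i^2$ and subtracting the equation for anchor 0 gives $2(x_i-x_0)x+2(y_i-y_0)y=d_0^2-d_i^2+x_i^2-x_0^2+y_i^2-y_0^2$, which is linear in $(x, y)$. The sketch assumes the receiver height is known, so the $d_i$ are horizontal distances; how distances are derived from received optical power is outside its scope.

```python
def trilaterate(anchors, distances):
    """Linear least-squares trilateration in 2-D.

    anchors: list of (x, y) transmitter positions (>= 3, not collinear)
    distances: horizontal distances from the receiver to each anchor
    Subtracting the first circle equation from the others yields A p = b,
    solved here through the 2x2 normal equations (A^T A) p = A^T b.
    """
    (x0, y0), d0 = anchors[0], distances[0]
    a11 = a12 = a22 = b1 = b2 = 0.0
    for (xi, yi), di in zip(anchors[1:], distances[1:]):
        row_x = 2.0 * (xi - x0)
        row_y = 2.0 * (yi - y0)
        rhs = d0 ** 2 - di ** 2 + xi ** 2 - x0 ** 2 + yi ** 2 - y0 ** 2
        a11 += row_x * row_x
        a12 += row_x * row_y
        a22 += row_y * row_y
        b1 += row_x * rhs
        b2 += row_y * rhs
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear or too few")
    return (a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det


leds = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0), (4.0, 4.0)]  # hypothetical layout
true_pos = (1.0, 2.0)
ranges = [((true_pos[0] - x) ** 2 + (true_pos[1] - y) ** 2) ** 0.5 for x, y in leds]
print(trilaterate(leds, ranges))
```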

AVII [129] is a VLC-based navigation system for visually impaired people. Along with VLC-based positioning, a geomagnetic sensor was introduced to provide accurate direction, and a sonar sensor was embedded for detecting obstacles along the navigation path. A modified Dijkstra’s algorithm enables the user to select the best and shortest navigation routes. The system gives instructions to the user as audio signals through an embedded earphone.

In [130], a VLC-based positioning system was integrated with the magnetic sensors of an Android smartphone to assist people with VI in indoor environments. The prototype uses an Android phone to calculate the user’s position, and a speech synthesizer on the phone provides instructions to the user through an earphone. The latitude and longitude of each location are stored as a visible light ID in the light associated with that location. Once the visible light receiver obtains the light ID, it transmits the ID to the smartphone via Bluetooth. The smartphone combines this information with the direction calculated from the geomagnetic sensor and provides route instructions to the user. The smartphone was attached to a strap that hung freely around the user’s neck; because of the users’ irregular motion, the strap swung more than expected, which caused errors in the geomagnetic sensor readings and in position estimation.

Reference [131] proposed a method for mitigating random errors in inertial sensors and removing outliers in a UWB system, where multipath and non-line-of-sight conditions cause the outliers. The proposed system consists of a UWB system and an inertial navigation system comprising an accelerometer, a gyroscope and a magnetometer. The UWB system uses the TDOA method and a least-squares algorithm for position estimation. An “anti-magnetic ring” was introduced to remove outliers in the UWB system under non-line-of-sight conditions, the first method to do so. To improve positioning accuracy, the information from the accelerometer and the UWB system was fused with a “double-state adaptive Kalman filter” algorithm based on the “Sage-Husa adaptive Kalman filter” and the “fading adaptive Kalman filter”. The results showed that including the “anti-magnetic ring” and the “double-state adaptive Kalman filter” algorithm reduced positioning errors.
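TDOA least-squares positioning can be sketched with Gauss-Newton iterations: each measurement is a range difference $\lVert p-a_i\rVert-\lVert p-a_0\rVert$ (time difference times the speed of light) relative to a reference anchor, and the solver repeatedly linearizes these hyperbolic constraints. The paper does not specify its least-squares variant, so this iterative solver, the anchor layout and the initial guess are all assumptions; the outlier removal and adaptive Kalman fusion of [131] are not shown.

```python
import math


def tdoa_solve(anchors, range_diffs, guess, iterations=50, tol=1e-10):
    """Gauss-Newton least squares for 2-D TDOA positioning.

    anchors: list of (x, y); anchors[0] is the reference anchor.
    range_diffs[i - 1] = ||p - anchors[i]|| - ||p - anchors[0]|| for i >= 1,
    i.e. the measured time difference multiplied by the speed of light.
    The guess must not coincide with an anchor (division by zero).
    """
    x, y = guess
    rx, ry = anchors[0]
    for _ in range(iterations):
        d_ref = math.hypot(x - rx, y - ry)
        h11 = h12 = h22 = g1 = g2 = 0.0
        for (ax, ay), measured in zip(anchors[1:], range_diffs):
            d_i = math.hypot(x - ax, y - ay)
            residual = (d_i - d_ref) - measured
            # Jacobian row: difference of unit vectors from each anchor to p.
            jx = (x - ax) / d_i - (x - rx) / d_ref
            jy = (y - ay) / d_i - (y - ry) / d_ref
            h11 += jx * jx
            h12 += jx * jy
            h22 += jy * jy
            g1 += jx * residual
            g2 += jy * residual
        det = h11 * h22 - h12 * h12
        # Solve (J^T J) delta = -J^T r for the 2x2 case.
        dx = -(h22 * g1 - h12 * g2) / det
        dy = -(h11 * g2 - h12 * g1) / det
        x, y = x + dx, y + dy
        if math.hypot(dx, dy) < tol:
            break
    return x, y


anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
tag = (3.0, 4.0)
d = [math.hypot(tag[0] - ax, tag[1] - ay) for ax, ay in anchors]
diffs = [d_i - d[0] for d_i in d[1:]]
print(tdoa_solve(anchors, diffs, guess=(5.0, 5.0)))
```

Under NLOS conditions some `diffs` would be biased, which is exactly the outlier problem [131] targets before fusing the result with inertial data.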

Table 3 provides a comparison of communication technology-based indoor navigation and positioning systems.

Pedestrian dead reckoning based indoor positioning and wayfinding systems

Hsu et al. [132] developed a system that depends only on the built-in sensors of smartphones and requires no external infrastructure. The user’s steps were detected by integrating the three-axis acceleration values from the accelerometer. The user’s step length was calculated by combining the maximum and minimum acceleration values; since step length varies from person to person, a user-specific parameter was also incorporated into this calculation. Changes in the user’s direction were determined from gyroscope data. Because PDR approaches accumulate localization errors, calibration marks are provided on the map and the floor. The main limitations of this work were variations in sensor data caused by how the phone is held or carried (for example, in a pocket or bag) and the absence of a path planning algorithm, which made navigation more difficult.
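A common way to turn maximum and minimum acceleration into a step length is the Weinberg-style formula $L = K\,(a_{\max}-a_{\min})^{1/4}$, where $K$ is the user-specific parameter. We assume this form for illustration; [132] may combine the values differently. Heading changes are sketched as simple integration of gyroscope yaw rate; function names and sample values are hypothetical.

```python
import math


def weinberg_step_length(accel_window, k_user):
    """Step length ~ k_user * (a_max - a_min)^(1/4) over one step's samples (m/s^2)."""
    return k_user * (max(accel_window) - min(accel_window)) ** 0.25


def integrate_heading(heading_rad, yaw_rates, dt):
    """Accumulate gyroscope yaw rate (rad/s) sampled every dt seconds.

    The result is wrapped to [-pi, pi] with atan2.
    """
    for rate in yaw_rates:
        heading_rad += rate * dt
    return math.atan2(math.sin(heading_rad), math.cos(heading_rad))


print(weinberg_step_length([8.0, 10.0, 12.0], k_user=0.5))
print(integrate_heading(0.0, [math.pi / 2] * 100, dt=0.01))  # 1 s turning at 90 deg/s
```

Pure gyroscope integration also integrates the sensor bias, so the heading drifts over time; that drift is one reason [132] needed calibration marks.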

Hasan and Mishuk [133] proposed a PDR-based navigation method for smart glasses. Since PDR methods need sensors to acquire data, the authors employed the smart eyewear “JINS-MEME”, which contains a three-axis accelerometer and gyroscope. PDR methods usually calculate the user’s current position from the last known position, so the user’s initial position must be known for tracking. Calculating the current position requires the user’s step length, the number of steps taken since the last known position and the user’s azimuth (heading angle). Data from the three-axis accelerometer are used for step detection. If the sensors are mounted on the foot, step detection can rely on the zero-velocity update; in this system, however, the sensors were attached to the smart glasses, so the norm of the acceleration was used instead, and each crossing of a predefined threshold by the norm is counted as one step. Since step length varies between users, a parameter is included in the step length calculation; this parameter was obtained from an experiment in which 4000 steps of 23 people were analyzed. In addition, an “extended Kalman filter” was used to fuse the accelerometer and gyroscope data, and the fused data were used to calculate the heading angle and to correct PDR and gyro sensor errors such as bias, noise and tilt. Fused data are more accurate for subsequent calculations than data from the individual sensors.
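Threshold-based step detection on the acceleration norm, followed by the PDR position update $x_{k+1}=x_k+L\cos\theta_k$, $y_{k+1}=y_k+L\sin\theta_k$, can be sketched as below. The 11 m/s² threshold, the refractory gap `min_gap` and the synthetic signal are hypothetical; the EKF heading fusion of [133] is not included, so the heading is passed in directly.

```python
import math


def accel_norm(sample):
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az)


def detect_steps(samples, threshold=11.0, min_gap=5):
    """Indices where the acceleration norm rises above threshold (m/s^2).

    min_gap is a hypothetical refractory period, in samples, that stops one
    step from being counted twice.
    """
    steps = []
    was_above = False
    last_step = -min_gap
    for i, sample in enumerate(samples):
        above = accel_norm(sample) > threshold
        if above and not was_above and i - last_step >= min_gap:
            steps.append(i)
            last_step = i
        was_above = above
    return steps


def pdr_update(x, y, step_length, heading_rad):
    """Advance the last known position by one step along the heading."""
    return x + step_length * math.cos(heading_rad), y + step_length * math.sin(heading_rad)


samples = [(0.0, 0.0, 9.81)] * 60  # at rest: norm equals gravity
for peak in (10, 11, 30, 50):
    samples[peak] = (0.0, 0.0, 12.0)
steps = detect_steps(samples)
x, y = 0.0, 0.0  # known initial position
for _ in steps:
    x, y = pdr_update(x, y, 0.7, 0.0)
print(steps, (x, y))
```

Counting only upward crossings means the two consecutive high samples at indices 10 and 11 register as a single step.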